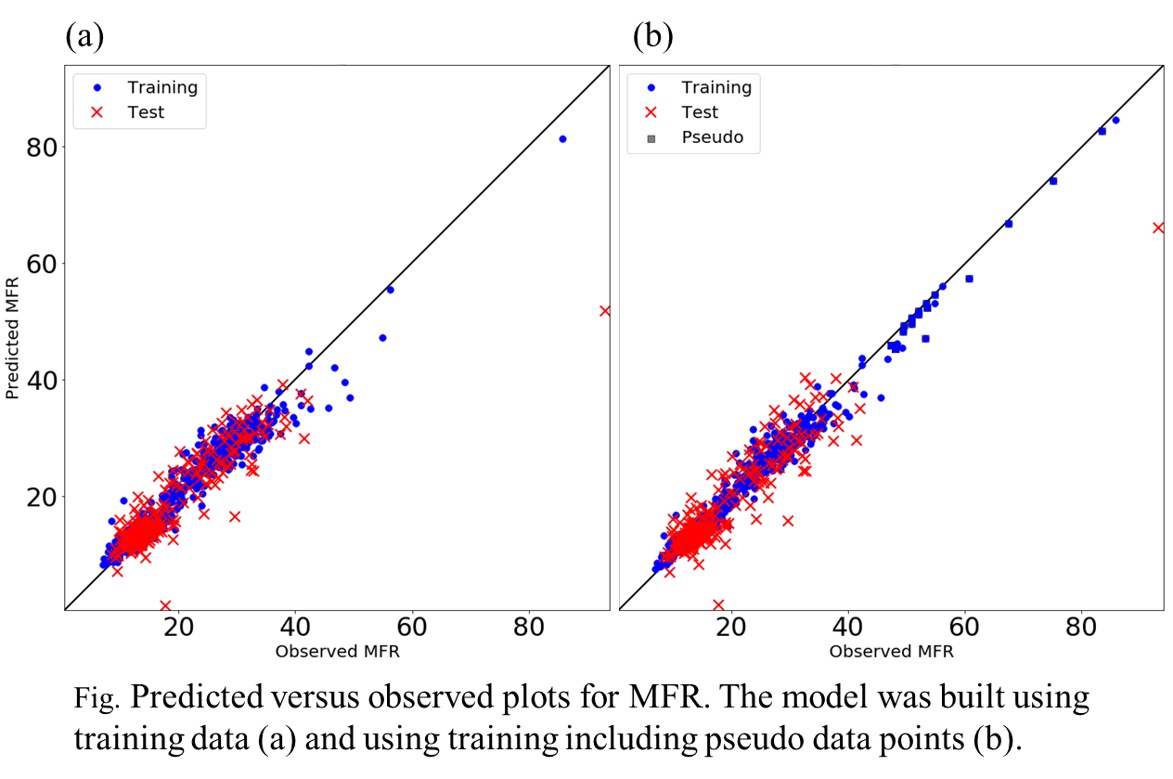

Nowadays, AI and IoT have become widespread, and demand for semiconductors has increased; therefore, cost reduction and improved production efficiency are needed in producing single-crystal silicon ingots, a key semiconductor material. A 300 mm diameter single-crystal silicon ingot is mainly produced by the Czochralski (CZ) method. To maintain high product quality, the crystal radius and the crystal growth rate must be controlled precisely by manipulating the heater input, the crystal pulling rate, and the crucible rise rate. Expecting that model predictive control would be useful for realizing precise control, we constructed a gray-box model of an industrial 300 mm CZ process in our past research. To predict the controlled variables using the gray-box model, it is necessary to calculate the initial values of the melt temperature and the heater temperature, which are not measured in the industrial CZ process. When the initial values were determined by minimizing the error between the measured and calculated values of the controlled variables just before the prediction period, the prediction accuracy at the end of the pulling process was lower than in the other periods. In the present work, we propose a method of estimating the initial values with a statistical model built from the data of past batches. Moving window partial least squares was used to develop the statistical model since it can cope with the time-varying characteristics of the CZ process, which originate from changes in the crystal length and the crucible position. The prediction accuracy was validated using the data of two batches, and the results showed that the proposed method reduced the prediction error by 24% on average in comparison with the conventional method, which uses only the data of the current batch.
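The moving-window idea can be illustrated with a minimal sketch. It assumes a PLS1 model with a single latent variable and synthetic, collinear data whose gain changes partway through; none of the names or numbers below come from the CZ process or from the authors' implementation.

```python
from math import sqrt

def fit_pls1_one_component(X, y):
    """Fit PLS1 with a single latent variable on mean-centered data."""
    n, m = len(X), len(X[0])
    x_mean = [sum(row[j] for row in X) / n for j in range(m)]
    y_mean = sum(y) / n
    Xc = [[row[j] - x_mean[j] for j in range(m)] for row in X]
    yc = [v - y_mean for v in y]
    # Weight vector: input direction with maximal covariance with y.
    w = [sum(Xc[i][j] * yc[i] for i in range(n)) for j in range(m)]
    norm = sqrt(sum(v * v for v in w))
    w = [v / norm for v in w]
    # Scores and the regression coefficient of y on the scores.
    t = [sum(Xc[i][j] * w[j] for j in range(m)) for i in range(n)]
    q = sum(ti * yi for ti, yi in zip(t, yc)) / sum(ti * ti for ti in t)
    return x_mean, y_mean, w, q

def predict(model, x):
    x_mean, y_mean, w, q = model
    score = sum((xj - mj) * wj for xj, mj, wj in zip(x, x_mean, w))
    return y_mean + q * score

def moving_window_predict(X, y, x_new, window):
    """Refit on the most recent `window` samples only, then predict."""
    model = fit_pls1_one_component(X[-window:], y[-window:])
    return predict(model, x_new)

# Synthetic data (assumption): x2 = 2 * x1, and the gain drifts from 3 to 5.
X = [[float(i), 2.0 * i] for i in range(40)]
y = [3.0 * i if i < 30 else 5.0 * i for i in range(40)]
x_new = [40.0, 80.0]  # the current gain implies a true output of 200

recent = moving_window_predict(X, y, x_new, window=10)
full = moving_window_predict(X, y, x_new, window=len(X))
print(recent, full)
```

In this toy case the model refitted on the last ten samples follows the current gain, whereas the model fitted on all samples averages over both regimes and misses the target.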

Soft-sensors are commonly used by engineers to predict quality variables that can only be sampled infrequently, using sensor readings that are continuously available. Soft-sensor models can be data-driven or model-driven. Data-driven models using non-parametric models such as artificial neural networks are simple to develop given sufficient data. However, there are usually concerns about the extrapolation ability of the model beyond the data range. A model-based soft-sensor usually requires a physical model and an observer algorithm such as a Kalman filter to estimate hidden state variables that cannot be directly measured. However, developing a physical model usually requires considerable effort. The recent development of deep learning opens up the possibility of developing more complex data-driven models that may be able to capture the actual system dynamics. For example, recurrent neural networks can be cast into sequence-to-sequence (Seq2Seq) conversation models that can predict subsequent conversation based on past speech. In this work, such a Seq2Seq model is formulated as an observer-predictor model that identifies hidden states of the system based on past actions and outcomes and predicts future outcomes based on current and future actions. The model is applied to quality predictions during mode transitions for the Tennessee Eastman Process. We found that after learning from a few mode-transition data sets, the model could predict mode changes that had not been encountered. An industrial distillation column separating a C4/C5 product was also studied. Results show that the model not only successfully predicted quality variables in the product stream, but also had much better gain consistency than a simple back-propagation network.

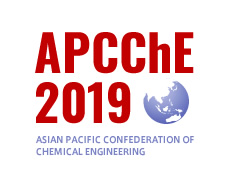

We propose an innovative monitoring method that can estimate the water content of granules by using only process parameters (PPs) obtained through standard instruments, e.g., a thermometer and a hygrometer. Thus, no investment is required to install specialized equipment such as a near-infrared (NIR) spectrometer, which is a common tool for process analytical technology (PAT). In addition, the proposed method is scale-free; the water content can be estimated accurately, regardless of the manufacturing scale, by selecting scale-independent PPs on the basis of variable importance in the projection (VIP) of partial least squares (PLS). The experimental results demonstrate the following: 1) the prediction accuracy of the developed method is equivalent to that of an NIR spectra-based method commonly used in the pharmaceutical industry, 2) the developed method is robust against changes in manufacturing scale, and 3) the prediction accuracy of locally weighted partial least squares (LW-PLS), which can cope with both collinearity and nonlinearity, is significantly higher than that of PLS. The developed method is a powerful tool for scale-up studies in batch processes because it enables scale-free monitoring of the water content of granules during fluid bed granulation without additional investment. The developed method is expected to promote real-time monitoring of fluid bed granulation processes as a cost-effective alternative to the existing NIR method.

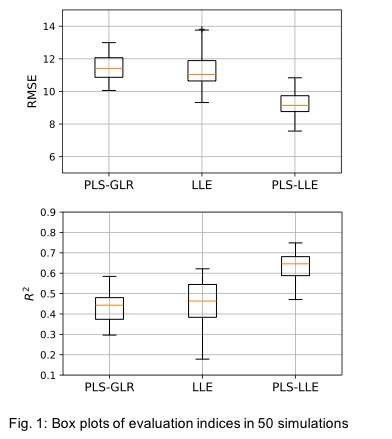

When designing a soft-sensor, we have to determine its parameters, such as the number of latent variables of a partial least squares (PLS) model. The parameters are usually selected to minimize the cross-validation error; however, a soft-sensor model with one set of parameters cannot cope with all the operating conditions. In such a case, the estimation error for some samples becomes large. In this research, an adaptive soft-sensor design method is proposed to reduce the estimation error for those samples. In the proposed method, the leave-one-out cross-validation error is calculated using all the model construction samples. Then the samples with large estimation errors are identified, and the model construction samples are divided into three groups. For each group, the parameters are optimized to minimize the leave-one-out cross-validation error for the group. In addition, linear discriminant analysis (LDA) is conducted to find the discriminant axis in the input variable space. If the classification performance of LDA is insufficient, kernel LDA is used. In the online procedure, each of the model validation samples is classified into one of the three groups, and the parameters are adaptively selected. By using the selected parameters, the soft-sensor is developed and the output estimate is calculated. The usefulness of the proposed method was confirmed through its application to twelve industrial process data sets. The root mean square error (RMSE) was improved by up to 11.8% and was never worse than the RMSE of the conventional method using only one set of parameters.
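The first steps of this procedure, computing leave-one-out errors and splitting the samples by error, can be sketched as follows. This is a minimal illustration, assuming a one-input least-squares line in place of a PLS model and a hypothetical grouping rule (large negative error, small error, large positive error); the abstract does not state how the three groups are formed, and the data and threshold are invented.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line; a stand-in for the PLS model."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def loocv_errors(xs, ys):
    """Leave each sample out, refit, and record its estimation error."""
    errors = []
    for i in range(len(xs)):
        slope, intercept = fit_line(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:])
        errors.append(ys[i] - (slope * xs[i] + intercept))
    return errors

def split_into_three_groups(errors, threshold):
    """Hypothetical rule: group sample indices by signed error size."""
    groups = {"large_negative": [], "small": [], "large_positive": []}
    for i, e in enumerate(errors):
        if e > threshold:
            groups["large_positive"].append(i)
        elif e < -threshold:
            groups["large_negative"].append(i)
        else:
            groups["small"].append(i)
    return groups

# Invented data: y = 2x + 1, except sample 3, which is shifted by +5.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.0, 3.0, 5.0, 12.0, 9.0, 11.0]

errors = loocv_errors(xs, ys)
groups = split_into_three_groups(errors, threshold=3.0)
print(groups)
```

In the proposed method, the model parameters would then be tuned separately for each group, and a classifier such as LDA would assign new samples to a group; those steps are not shown here.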

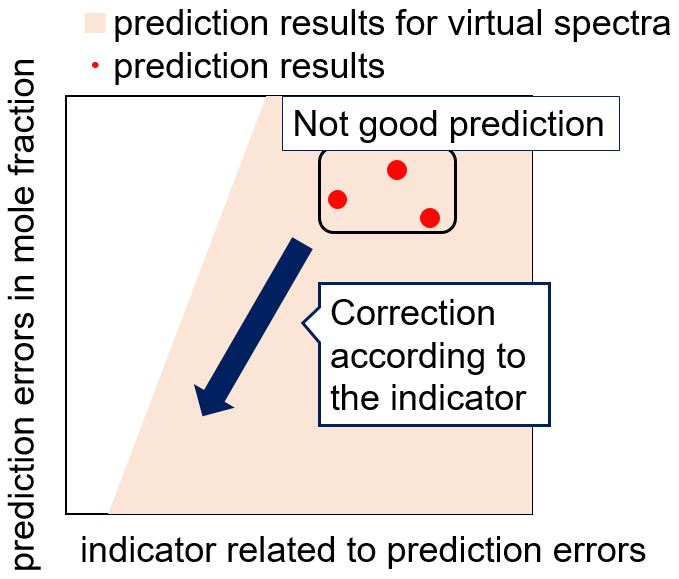

Continuous manufacturing (CM) has attracted attention in the pharmaceutical industry because it is expected to reduce manufacturing costs. One of the technical hurdles in continuous manufacturing is the establishment and maintenance of predictive models for process monitoring. Conventionally, calibration models based on optical spectra such as infrared or Raman spectra have been used as the predictive models for process monitoring. The calibrated models predict product qualities such as the content of the active pharmaceutical ingredient, moisture content, particle size, and so on. However, any changes in lots, ratios of ingredients, or operating conditions may affect the relationship between sensor information and the product qualities, which results in deterioration of the predictive models. Operators must update calibration models to assure predictive accuracy; however, calibration always requires data acquisition. Thus, the use of calibration models intrinsically increases costs. To tackle this problem, the authors have been working on a calibration-free approach with infrared spectra, which employs a physical equation. To apply the calibration-free approach to real processes, it is important that the model provides accurate and reliable predictions. In this study, we propose a method to improve the predictive accuracy of a calibration-free approach after assessing predictive errors using a rational indicator. We verified that the update method succeeded for non-ideal binary mixtures.

As the most important component of China's process industry, the refining and chemical industry is not only a pillar industry of economic and social development, but also an important cornerstone of the real economy. After decades of development, the production capacity of China's refining industry ranks second in the world, and great progress has been made in product quality, cleanliness, supporting technology, and process automation. With the rapid improvement of computer software and hardware and the development of machine-learning models and algorithms, the process industry has entered the era of big data. Also, with the impetus of the "Made in China 2025" national strategy, the construction of intelligent refining has been put on the agenda.

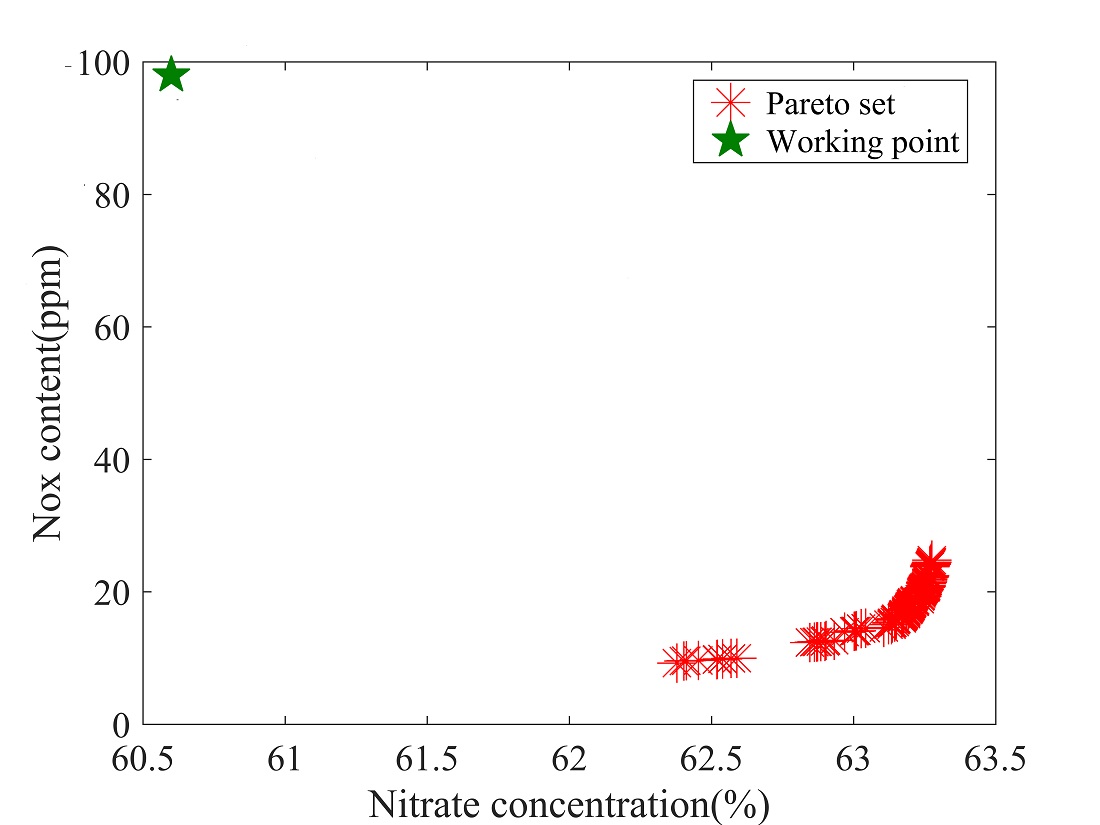

Modelling is the core of intelligent refining. The process industry is characterized by high complexity, high dimensionality, and nonlinearity. Centralized modelling can therefore cause problems such as poor model performance, and system decomposition techniques play an important role in model-based control strategies for large-scale industrial processes. EMD (Empirical Mode Decomposition) is an adaptive decomposition method proposed by Huang et al. (1998) for nonlinear processes. It has been applied in different industrial fields and its effectiveness has been verified. CEEMD (Complete ensemble empirical mode decomposition) is an improved version of EMD. By adding two sets of white noise signals with opposite signs, the phenomenon of mode aliasing can be resolved to some extent, and more multi-scale information in complex industrial processes can be separated and identified.

In this work, the CEEMD method is applied to an industrial FCC unit, and a soft sensor model is established at each sub-scale to capture more sub-scale information and achieve better overall measurement behavior. Comparing the results of decomposition modelling and centralized modelling shows that the decomposition modelling method offers higher measurement accuracy and better generalization performance.

Optimal control theory is applied to determine Pareto-optimal fronts for multi-objective optimization problems for seeded batch crystallization processes. A transformation of variables first suggested by Hofmann and Raisch (2010) is used to determine nearly-analytical expressions for the optimal supersaturation trajectory at each point on the Pareto front. A simple crystallization kinetic model for potassium nitrate crystallizing from water is used to illustrate the method.

Four sets of objective functions are considered in this work. Results show that if one objective is based on higher moments (e.g. the third moment of nucleated crystals) while the other is based on lower moments (e.g. the number mean crystal size), the Pareto-optimal front is relatively wide, indicating significant competition between the two objectives. This is consistent with the conclusion of previous work (Tseng and Ward, 2017). In these cases, a constant growth rate trajectory may represent a good trade-off between the two objectives. By contrast, if both objectives are based on higher moments or both on lower moments, the trade-off between the two objectives is less significant and the optimal trajectories for each single objective are similar.
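The two kinds of objective mentioned above can be made concrete with a short sketch that evaluates moments of a discrete crystal size distribution. The populations below are invented for illustration and are not taken from the potassium nitrate model; the k-th moment is computed as the sum of number times size to the power k.

```python
def moment(population, k):
    """k-th moment of a discrete size distribution.

    `population` is a list of (size, number) pairs.
    """
    return sum(number * size ** k for size, number in population)

# Hypothetical populations, sizes in micrometres.
seed_grown = [(100.0, 1000.0), (120.0, 500.0)]
nucleated = [(5.0, 20000.0), (10.0, 5000.0)]
all_crystals = seed_grown + nucleated

# Higher-moment objective: third moment of nucleated crystals (volume-like).
mu3_nucleated = moment(nucleated, 3)

# Lower-moment objective: number mean size, mu1 / mu0, over all crystals.
number_mean_size = moment(all_crystals, 1) / moment(all_crystals, 0)

# Share of the total third moment that belongs to the nucleated crystals.
nucleated_volume_share = mu3_nucleated / moment(all_crystals, 3)
print(mu3_nucleated, number_mean_size, nucleated_volume_share)
```

In this example the many small nuclei pull the number mean size far below the seed sizes while contributing well under one percent of the third moment, which is one way to see why objectives built on different moments can respond very differently to the same trajectory.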

This work demonstrates the inherent trade-off between objective functions in batch crystallization process and offers guidance for determining the “best” operating recipe when more than one objective is important. This understanding can facilitate the design of batch crystallization recipes.

References

Miller, S. M., Rawlings, J. B. (1994). Model identification and control strategies for batch cooling crystallizers. AIChE Journal, 40, 1312–1327.

Hofmann, S., Raisch, J. (2010). Application of optimal control theory to a batch crystallizer using orbital flatness, 16th Nordic Process Control Workshop, Lund, Sweden, 25–27 August 2010.

Tseng, Y. T., Ward, J. D. (2017). Comparison of objective functions for batch crystallization using a simple process model and Pontryagin's minimum principle. Computers & Chemical Engineering, 99, 271–279.

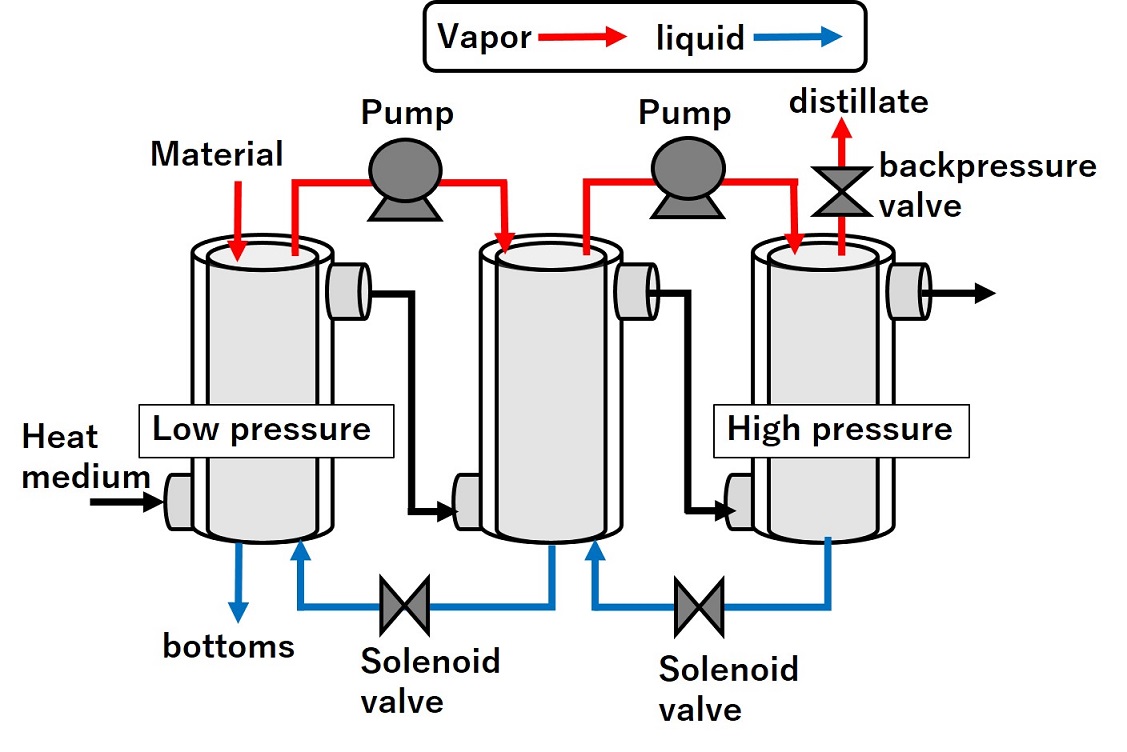

Although modern chemical plants are becoming more complex than they used to be, their operating procedures are still synthesized manually. Since this practice is laborious and error-prone, it is desirable to develop a viable approach to automatically conjecture a sequence of executable actions to perform various tasks in realistic processes (Li et al., 2014). In this work, automata (Cassandras and Lafortune, 2008) are adopted to model all components in the given system. To facilitate efficient procedure synthesis, the entire operation is divided into distinguishable stages, and the intrinsic nature of each stage, e.g., stable operation, condition adjustment, material charging and/or discharging, etc., is then identified. The control specifications of every stage are also described with automata to set the target state, to create distinct operation paths, to restrict operation steps to follow the preferred sequences, and to avoid unsafe operations. A system model and the corresponding observable event traces (OETs) can then be produced by synchronizing all the aforementioned automata. The corresponding operating procedures can be formally summarized with sequential function charts (SFCs) according to these OETs. Aspen Plus Dynamics has been used to verify the correctness of these SFCs in simulation studies. Since more than one procedure may be generated, these simulation results can also be adopted to rank them based on operability, safety, and economic criteria. Three examples, i.e., a semi-batch reaction process and the startup operations of continuous flash and distillation processes, are reported to demonstrate the effectiveness of the proposed synthesis strategy in practical applications.
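The synchronization step can be sketched with the standard parallel composition of automata, in which shared events must occur in both automata at once and private events occur in one automaton alone. The valve, heater, and specification automata below are hypothetical toy components, untimed and fully observable, and are not taken from the three reported examples.

```python
from collections import deque

def automaton(events, initial, transitions):
    return {"events": set(events), "initial": initial,
            "transitions": transitions}

def parallel_composition(a, b):
    """Build the reachable part of the parallel composition of a and b."""
    shared = a["events"] & b["events"]
    initial = (a["initial"], b["initial"])
    transitions, seen, queue = {}, {initial}, deque([initial])
    while queue:
        state = queue.popleft()
        sa, sb = state
        for event in sorted(a["events"] | b["events"]):
            na = a["transitions"].get((sa, event))
            nb = b["transitions"].get((sb, event))
            if event in shared:      # both automata must move together
                target = (na, nb) if None not in (na, nb) else None
            elif event in a["events"]:   # private event of a
                target = (na, sb) if na is not None else None
            else:                        # private event of b
                target = (sa, nb) if nb is not None else None
            if target is None:
                continue
            transitions[(state, event)] = target
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return automaton(a["events"] | b["events"], initial, transitions)

def shortest_trace(model, is_target):
    """Breadth-first search for a shortest event trace to a target state."""
    queue, seen = deque([(model["initial"], [])]), {model["initial"]}
    while queue:
        state, trace = queue.popleft()
        if is_target(state):
            return trace
        for (source, event), target in model["transitions"].items():
            if source == state and target not in seen:
                seen.add(target)
                queue.append((target, trace + [event]))
    return None

valve = automaton({"open_valve", "close_valve"}, "closed",
                  {("closed", "open_valve"): "open",
                   ("open", "close_valve"): "closed"})
heater = automaton({"start_heater", "stop_heater"}, "off",
                   {("off", "start_heater"): "on",
                    ("on", "stop_heater"): "off"})
# Specification: the heater may only start after the valve has been opened.
spec = automaton({"open_valve", "start_heater"}, "s0",
                 {("s0", "open_valve"): "s1", ("s1", "start_heater"): "s2"})

system = parallel_composition(parallel_composition(valve, heater), spec)
trace = shortest_trace(system, lambda state: state[0][1] == "on")
print(trace)
```

Because the specification shares `start_heater` with the heater, that event is blocked in the initial state of the composed system, and the only shortest trace that switches the heater on opens the valve first. Such a trace plays the role of an event trace from which a step sequence could be written down.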

References:

Cassandras, C. G., Lafortune, S., Introduction to Discrete Event Systems, 2nd edition, Springer, New York, NY, USA, 2008.

Li, J. H., Chang, C. T., Jiang, D., Systematic generation of cyclic operating procedures based on timed automata. Chem. Eng. Res. Des. 92, 139–155, 2014.

Gaussian process regression (GPR) has been gaining popularity due to its nonparametric Bayesian form. However, the traditional GPR model is designed for continuous real-valued outputs with a Gaussian assumption, which does not hold in some engineering applications. For example, causal analysis of defects in steel products aims to discover the relationships between a set of process variables and the number of defects, which is a count-data output; the Gaussian assumption is invalid and the GPR model cannot be directly applied. Generalized Gaussian process regression (GGPR) can overcome this drawback of conventional GPR, as it allows the model outputs to follow any member of the exponential family of distributions. Thus, GGPR is more flexible than GPR. However, since GGPR is a nonlinear kernel-based method, the effect of each input feature on the model output is not easy to understand. To tackle this issue, sensitivity analysis of GGPR (SA-GGPR) is proposed in this work. SA-GGPR aims to identify the factors that exert a higher influence on the model output by utilizing the partial derivatives of the GGPR model output with respect to its inputs. The proposed method was applied to a nonlinear count-data system. The application results demonstrated that the proposed method is superior to the PLS-Beta, PLS-VIP, and SA-GPR methods in identification accuracy.
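Derivative-based sensitivity analysis can be sketched independently of the regression model. The example below assumes a hypothetical stand-in for a trained count model (an exponential mean function, not a GGPR), estimates the partial derivatives by central finite differences, and ranks the inputs by mean absolute sensitivity; the coefficients and samples are invented.

```python
from math import exp

def expected_count(x):
    """Hypothetical stand-in for a trained count model (log link)."""
    return exp(0.8 * x[0] - 0.2 * x[1] + 0.0 * x[2])

def mean_abs_sensitivity(model, samples, step=1e-5):
    """Average |d model / d x_j| over the samples, by central differences."""
    n_inputs = len(samples[0])
    totals = [0.0] * n_inputs
    for x in samples:
        for j in range(n_inputs):
            up, down = list(x), list(x)
            up[j] += step
            down[j] -= step
            totals[j] += abs(model(up) - model(down)) / (2.0 * step)
    return [total / len(samples) for total in totals]

samples = [[0.0, 0.0, 0.0], [1.0, 0.5, 2.0], [0.5, 1.0, -1.0]]
sensitivity = mean_abs_sensitivity(expected_count, samples)

# Inputs ordered from most to least influential.
ranking = sorted(range(len(sensitivity)), key=lambda j: -sensitivity[j])
print(sensitivity, ranking)
```

With an actual kernel model the derivatives could be obtained analytically from the kernel; finite differences are used here only to keep the sketch model-agnostic.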

A plant alarm system notifies operators of plant state deviations. A poor alarm system might cause sequential alarms, which are triggered in succession by a single root cause in a chemical plant. Sequential alarms reduce the capacity of operators to cope with plant abnormalities because critical alarms are buried under numerous unimportant alarms. In this paper, we propose a method for identifying sequential alarms in time-stamped alarm data. The time-stamped alarm data, which consist of alarm tags and their occurrence times, are generally logged in plant operation data. Repeated alarm occurrence patterns in plant operation data can be regarded as sequential alarms. The proposed method formulates the problem of identifying sequential alarms in plant operation data as the problem of mining repeated similar alarm subsequences. The identified sequential alarms help engineers reduce the number of unnecessary alarms more effectively. The usefulness of the method is demonstrated through a case study of an azeotropic distillation column.
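A much simplified version of this mining problem can be sketched as follows. The example assumes exact matching of contiguous alarm subsequences of a fixed length that occur within a maximum time span, whereas the proposed method searches for similar subsequences; the alarm tags, time stamps, and thresholds are hypothetical.

```python
from collections import Counter

def repeated_alarm_patterns(log, length, max_span, min_count=2):
    """Count contiguous tag subsequences that recur within `max_span`.

    `log` is a list of (timestamp_in_seconds, alarm_tag) sorted by time.
    """
    counts = Counter()
    for i in range(len(log) - length + 1):
        window = log[i:i + length]
        # Only alarms raised close together are treated as one sequence.
        if window[-1][0] - window[0][0] <= max_span:
            counts[tuple(tag for _, tag in window)] += 1
    return {pattern: n for pattern, n in counts.items() if n >= min_count}

# Hypothetical time-stamped alarm log.
log = [
    (0, "LI101.HI"), (5, "PI102.HI"), (9, "TI103.HI"),
    (300, "FI201.LO"),
    (600, "LI101.HI"), (604, "PI102.HI"), (611, "TI103.HI"),
    (900, "LI101.HI"), (2000, "PI102.HI"),
]

patterns = repeated_alarm_patterns(log, length=3, max_span=60)
print(patterns)
```

The level, pressure, and temperature alarms recur twice in the same order within a few seconds, so they are reported as a candidate sequential alarm, while the isolated alarms are ignored.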

In biopharmaceutical drug product manufacturing, establishing and maintaining a sterile production environment after a product change-over is essential to guarantee product quality. Inside an isolator, both the exterior and the interior surfaces of the machine require intensive cleaning and disinfection, achieved through hydrogen peroxide decontamination and clean-in-place (CIP)/sterilization-in-place (SIP), respectively. Numerous sensors are available to constantly monitor the process and provide real-time measurements of process variables. Generally, the stored sensor data are only used for backtracking actions. In our work, we use these stored data sets to establish a predictive monitoring system for the process and to perform early failure detection. The first step includes data preparation and classification of the data into failed and successful runs. This is followed by the definition of the boundaries of successful runs, i.e., “Golden Zones”, and the prediction of the complete process performance after a few minutes of operation. The prediction is achieved through the application of machine learning algorithms, such as Random Forest and k-Nearest Neighbor. The final step compares and assesses the distance of the prediction to the “Golden Zone” boundaries to guide the operators' decision-making. We further explore opportunities to improve the machine learning algorithms to achieve the earliest possible failure detection points, i.e., to maximize the “time distance to the alarm”. This could be especially helpful when failures can be detected before the introduction of the hydrogen peroxide, which avoids the need to repeat the aeration process, the most time-consuming step in the procedure.
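The boundary definition and the distance check can be sketched with a simple envelope. The example assumes that a "Golden Zone" is the per-time-step minimum and maximum over successful runs, optionally widened by a margin, and that the distance of a predicted trajectory is its largest excursion outside that envelope; both definitions and all numbers are illustrative assumptions, and the prediction step itself (Random Forest or k-Nearest Neighbor) is not shown.

```python
def golden_zone(successful_runs, margin=0.0):
    """Per-time-step lower and upper bounds over the successful runs."""
    lower = [min(values) - margin for values in zip(*successful_runs)]
    upper = [max(values) + margin for values in zip(*successful_runs)]
    return lower, upper

def distance_to_zone(trajectory, zone):
    """Largest excursion outside the zone; 0.0 if fully inside."""
    lower, upper = zone
    worst = 0.0
    for value, low, high in zip(trajectory, lower, upper):
        worst = max(worst, low - value, value - high)
    return worst

# Hypothetical sensor readings of three successful runs at four time steps.
successful_runs = [
    [0.0, 200.0, 400.0, 380.0],
    [0.0, 220.0, 420.0, 400.0],
    [0.0, 210.0, 410.0, 390.0],
]
zone = golden_zone(successful_runs)

predicted_good = [0.0, 205.0, 415.0, 395.0]
predicted_bad = [0.0, 150.0, 300.0, 330.0]

good_distance = distance_to_zone(predicted_good, zone)
bad_distance = distance_to_zone(predicted_bad, zone)
print(good_distance, bad_distance)
```

A distance of zero supports continuing the run, while a large distance predicted early in the cycle could prompt the operator to stop before the most time-consuming steps.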

Our approach was applied to a hydrogen peroxide decontamination process of an industrial filling line, resulting in a 50% reduction in the number of erroneous runs that had to be repeated and leading to significant savings in operational time and cost.

In process industries, fault detection and diagnosis (FDD) are the first and most important steps in uncovering abnormal situations and making timely corrections. For decades, many FDD methods have been developed, and they can be broadly categorized into data-driven and model-based approaches. Recently, combining different kinds of methods to create hybrid approaches that can overcome the disadvantages of individual methods has become an attractive research topic. In previous work, we achieved better fault detection performance by combining a data-driven method with process knowledge. In this work, we expand this idea to create a hybrid fault diagnosis approach by combining principal component analysis (PCA), qualitative reasoning, and process knowledge. PCA is utilized as the detection tool in this approach. Meanwhile, qualitative reasoning is used for diagnosis because it can estimate process behavior with limited and uncertain information, mimicking the fault reasoning of expert engineers. Process knowledge consists of important operating information, such as mass or energy balances, non-measured process parameters, and the controlled/manipulated variable ratio in a control loop. In this approach, process knowledge is incorporated into both PCA fault detection and diagnostic qualitative reasoning in an effort to achieve better FDD performance.

This approach is tested on the Tennessee Eastman Process. Before the test, the target process is divided into several operation units to construct qualitative unit models and, most importantly, to extract operating information using process knowledge. The equations describing this process knowledge are established both quantitatively and qualitatively. The quantitative equations are used for detection purposes in the same way as in our previous work. The qualitative knowledge equations, as well as the qualitative unit models, are represented in consistency matrices for diagnosis purposes. In online sample testing, this approach achieved more effective fault diagnosis than the traditional PCA contribution plot method.

This study investigated the fault diagnosis performance of the entire and early detections for complicated chemical processes using the time-series recurrent neural networks (RNNs). The investigation included various layers and neuron nodes in RNNs using lean and rich training datasets and compared these RNNs with the artificial neural networks (ANNs). Further, the mechanism of classification of the RNNs was revealed in this study. The benchmark of the Tennessee Eastman process was used to demonstrate the performance of the recurrent neural network-based fault diagnosis model. The results showed that the ANNs and the RNNs required only a single hidden layer to reach their maximum accuracy, regardless of whether they use lean or rich training datasets. It was also observed that the ANNs required a higher number of the nodes than the RNNs.

Furthermore, the accuracy of the RNNs using the lean training dataset was equivalent to that of the ANNs using the rich training dataset. The variant RNN can distinguish between the fault types using the rich training dataset. We discovered that the classification mechanism of the ANNs was a priori classification, which was incapable of separating the fault types having similar features. RNNs did not have this limitation, as they were not a priori classification. In summary, the RNNs demonstrated a better performance with regard to the fault diagnosis in chemical processes than the ANNs, and were effective in classifying the fault types with subtle features when there is sufficient data.

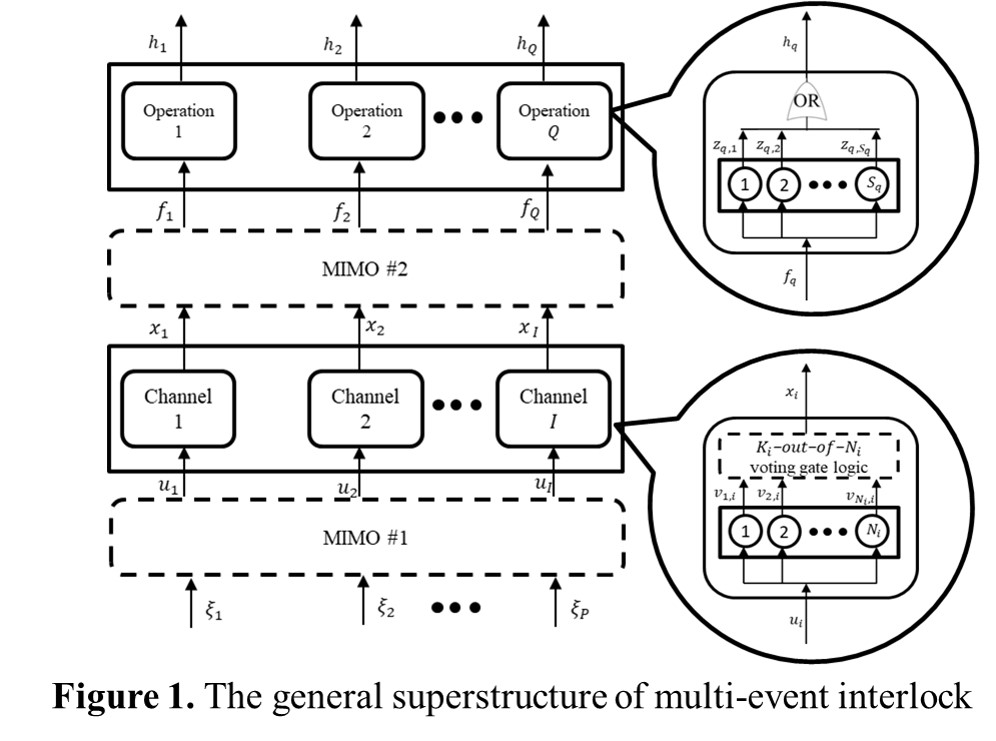

In order to mitigate the detrimental outcomes of process anomalies, modern chemical processes are generally equipped with safety interlocks. The conventional approach to design a protective system is usually aimed for prevention of the hazardous outcomes caused by a single abnormal event. However, since there may be more than one independent event producing multiple undesired effects within a realistic process, it is therefore necessary to develop a design methodology for the multi-input multi-output protective systems (see Figure 1). Specifically, the aim of this work is to construct a MINLP model to minimize the expected lifecycle loss of multi-event interlock and to generate the corresponding system configuration. In particular, the resulting specifications should include: (1) the number of online sensors in each alarm channel, (2) the voting-gate logic in each alarm channel, (3) the alarm logic, and (4) the number of shutdown elements for each shutdown operation. A simple example, i.e., the sump of a distillation column connected with a fired reboiler, is used in this paper to illustrate the aforementioned novel approach. From the optimization results, it can be observed that the proposed design strategy is indeed superior to the traditional one.
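The effect of the sensor count and voting-gate logic in one alarm channel can be illustrated with a small calculation. The sketch assumes identical, independent sensors, each with a hypothetical probability of missing a real event and of raising a spurious signal; it evaluates only the binomial probabilities of a k-out-of-n gate and is not the MINLP model of this work.

```python
from math import comb

def at_least_k_of_n(k, n, p):
    """Probability that at least k of n independent sensors signal."""
    return sum(comb(n, i) * p ** i * (1.0 - p) ** (n - i)
               for i in range(k, n + 1))

def channel_probabilities(k, n, miss_prob, spurious_prob):
    """Return (failure on demand, false alarm) for a k-out-of-n gate."""
    failure_on_demand = 1.0 - at_least_k_of_n(k, n, 1.0 - miss_prob)
    false_alarm = at_least_k_of_n(k, n, spurious_prob)
    return failure_on_demand, false_alarm

# Hypothetical sensor data: 10% missed detections, 5% spurious signals.
one_of_one = channel_probabilities(1, 1, 0.10, 0.05)
one_of_two = channel_probabilities(1, 2, 0.10, 0.05)
two_of_three = channel_probabilities(2, 3, 0.10, 0.05)
print(one_of_one, one_of_two, two_of_three)
```

Under these assumptions a 1-out-of-2 gate lowers the failure-on-demand probability but raises the false-alarm probability relative to a single sensor, whereas a 2-out-of-3 gate improves both. An optimization model can weigh such trade-offs against hardware and shutdown costs.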

In recent years, cyberattacks against plant control systems have become a growing threat. Conventional plant control systems were closed systems. However, they are now connected to external networks such as MES, ERP, and maintenance networks. This change opens up various paths for cyberattacks.

Cyberattacks against a plant control system can directly harm the physical space and cause accidents and disasters affecting human life. When the plant control system is under cyberattack, on-site plant operators should handle the situation appropriately. However, plant operators are usually not aware of cybersecurity for plant operations. They do not have enough experience with or knowledge of cyberattacks.

What can they do under a cyberattack? They must maintain safe operation and stop the attack. A simple and effective way to stop a cyberattack is to cut off the attack path by disconnecting network cables.

It is then important to minimize the negative impacts on plant operations, such as those on safety and productivity. For this purpose, if the network has been divided into zones and conduits in advance, operators can decide which zone should be cut off, considering the network monitoring information and the impacts on plant operations.

Making a suitable decision requires that plant operators have enough useful information.

In this paper, we developed a support tool that helps plant operators make decisions against cyberattacks by combining two important kinds of information.

One is the vulnerability information of the components of the plant control system, such as the OS, middleware, and applications of each OPC server, HMI, and so on. It is based on CVSS (Common Vulnerability Scoring System).

The other is network monitoring information in the plant control network.

The developed GUI shows the candidate points at which the network can be cut off and helps plant operators make a suitable decision against cyberattacks.

In this study, a new methodology was developed to enhance the performance of control systems in chemical processes. An artificial neural network (ANN) was used to develop a model of the control system. For this, the process model was initially developed using Aspen HYSYS, and operation data were generated for various operation scenarios. Then, the generated data were used to train the ANN, and the results were tested and analyzed. Subsequently, the developed ANN model was integrated with an optimization algorithm so that the manipulated variables were optimized in real time to maximize operating efficiency. The developed model predictive control (MPC) system was tested on various operation scenarios, and the results showed higher performance than conventional control methods.

Acknowledgment:

This work was supported by a research project entitled “Development of Integrated Interactive Model for Subsea and Topside System to Evaluate the Process Design of Offshore Platform” funded by the Ministry of Trade, Republic of Korea (Project no. N10060099).

Human interventions are common and indispensable during process operations. However, even in this era of big data where large volumes of process measurements are regularly collected and monitored, very little is known about human operators and their cognitive state while operating the plant. This absence of measurements prevents a scientific evaluation of the contribution of operators to the overall system performance. In this paper, we will discuss the need for an informatics-based approach to study the operational behaviors of plant personnel. Next, using the control room operator as an example, we will identify various technologies such as eye tracking and electroencephalography that can offer insights into the operators' cognitive state and their decision-making processes. Finally, we will explore the various ways by which such studies could help enhance the operator's performance – including context-aware interfaces, decision-support systems, and training protocols.

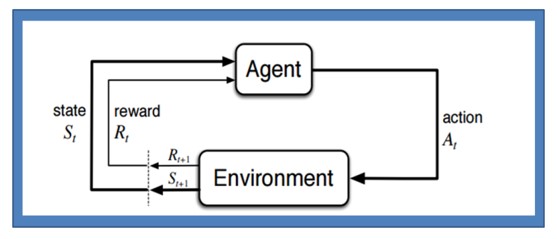

This paper provides a brief introduction to Reinforcement Learning (RL) technology, summarizes recent developments in this area, and discusses their potential implications for the field of process systems engineering. The paper begins with a brief introduction to RL, a machine learning technology that allows an agent to learn, through trial and error, the best way to accomplish a task. We then highlight two new developments in RL that have led to the recent wave of applications and media interest. A comparison of the key features of RL vs. Model Predictive Control (MPC) and other traditional mathematical programming based methods is then presented in order to clarify their relative merits and shortcomings. This is followed by an assessment of areas where RL technology can potentially be used in process systems engineering applications. Particular focus is given to integrating planning and scheduling layers in multi-scale, multi-period, stochastic problems.

Gas hydrates are ice-like crystalline solids formed from natural gas and water. Several methods can be used to prevent hydrate formation, such as solvent absorption, adsorption, and membrane processes. This work studied the modelling and optimization of natural gas dehydration using the solvent absorption method. Triethylene glycol (TEG) was used as the solvent to remove water from natural gas. Latin Hypercube sampling (LHS) was studied as a sampling technique in terms of its ability to represent the nonlinear model for the optimization problem. The nonlinear model represents the relationship between the operating conditions and the amount of dry natural gas produced. In the optimization, the total cost of the process was minimized. Both equipment and operating costs were considered in the economic evaluation. The TEG absorption process was simulated using Aspen HYSYS version 8.8. The results show that LHS is able to accurately represent the nonlinear model between the operating conditions and the total cost of the process for the optimization problem. The optimal operating conditions of the process were obtained.
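Latin Hypercube sampling can be sketched in a few lines. The example below divides each variable range into equally wide strata, draws one point per stratum, and shuffles the strata independently for each variable; the two variables and their bounds are hypothetical and do not come from the simulated TEG process.

```python
import random

def latin_hypercube(n_samples, bounds, seed=0):
    """Return n_samples points; each variable hits every stratum once."""
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # One uniformly placed point inside each of n equal-width strata.
        points = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(points)  # decouple the strata order between variables
        columns.append([low + p * (high - low) for p in points])
    return [list(row) for row in zip(*columns)]

# Hypothetical operating ranges: solvent rate (m3/h), reboiler temp. (deg C).
bounds = [(1.0, 5.0), (180.0, 204.0)]
samples = latin_hypercube(5, bounds)
for row in samples:
    print(row)
```

Each sampled operating point would then be passed to the process simulator, and the resulting costs used to fit the surrogate model that is optimized.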

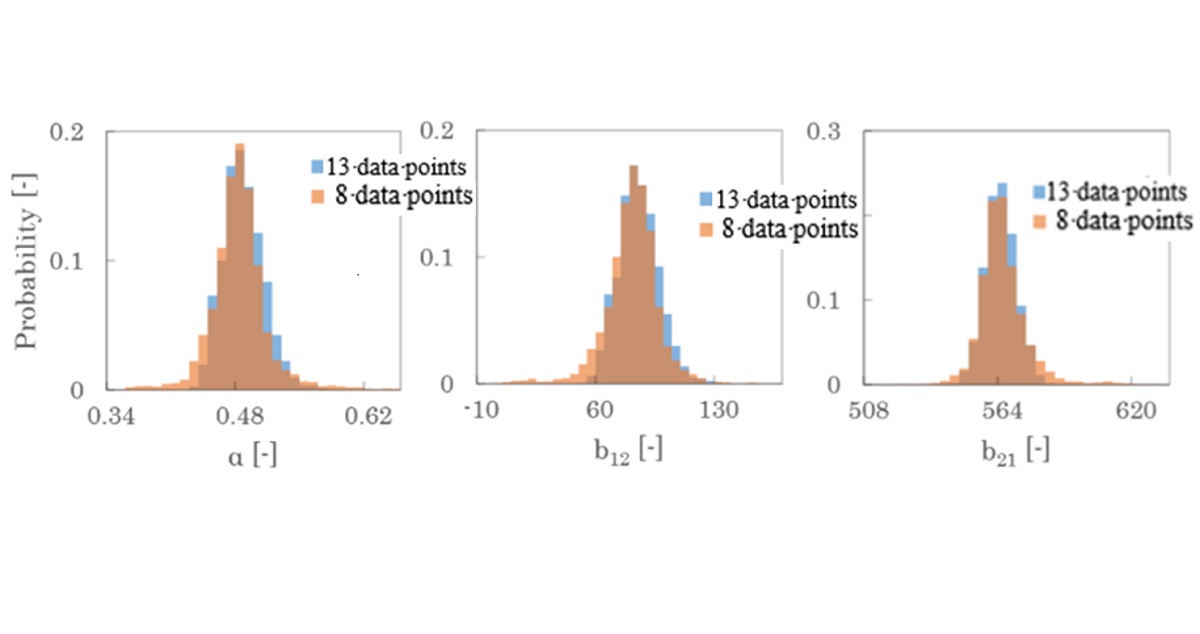

Model equations used in process design involve parameters, which can be estimated from experimental data. The estimation accuracy of model parameters is critical to ensuring the robustness of processes. The objective of this study is to quantify estimation accuracy using Bayesian inference techniques in parameter estimation. In this work, the Markov chain Monte Carlo (MCMC) method is used to obtain the probability distribution. This algorithm repeats random sampling in the parameter space and approximates the profile of the posterior probability distribution by the density of the sampled points. This method can handle complex nonlinear models. We demonstrate this method by estimating parameters in the non-random two-liquid (NRTL) model for vapor-liquid equilibrium. The NRTL parameters are estimated by the MCMC method, and the influence of the number of experimental data points on estimation accuracy is quantified. We compared two estimation cases, in which 8 and 13 experimental data points[1] are used, and quantified the difference in parameter accuracy. The estimation results for the ethanol-water system are shown in Figure 1 for three parameters, a, b12, and b21, that describe intermolecular interactions in the NRTL model for the binary system. In addition, the proposed method is demonstrated for a multicomponent system that involves a larger number of components.

[1]Dortmund Data Bank Software & Separation Technology“VLE - Vapor Liquid Equilibria of Normal Boiling Substances”

http://www.ddbst.com/ddb-vle.html (2019.2.16)
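The sampling idea behind MCMC can be illustrated with a random-walk Metropolis sketch. It assumes a single parameter, a flat prior, Gaussian measurement noise with known standard deviation, and synthetic data; it is not the NRTL model, and the data are not taken from the Dortmund Data Bank. The 8-point and 13-point cases only mirror the comparison described above.

```python
import math
import random

def log_posterior(theta, data, sigma):
    """Flat prior plus Gaussian likelihood with known noise level sigma."""
    return -sum((y - theta) ** 2 for y in data) / (2.0 * sigma ** 2)

def metropolis(data, sigma, n_steps=20000, step=0.3, seed=1):
    """Random-walk Metropolis sampler for one parameter."""
    rng = random.Random(seed)
    theta = 0.0
    current = log_posterior(theta, data, sigma)
    chain = []
    for _ in range(n_steps):
        proposal = theta + rng.gauss(0.0, step)
        candidate = log_posterior(proposal, data, sigma)
        # Accept with probability min(1, posterior ratio).
        if rng.random() < math.exp(min(0.0, candidate - current)):
            theta, current = proposal, candidate
        chain.append(theta)
    return chain[n_steps // 5:]  # discard the first 20% as burn-in

def mean_and_std(values):
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / (len(values) - 1)
    return mean, math.sqrt(variance)

# Synthetic measurements (assumption): true value 2.0, noise std 0.5.
data_rng = random.Random(42)
data13 = [2.0 + data_rng.gauss(0.0, 0.5) for _ in range(13)]
data8 = data13[:8]

mean8, std8 = mean_and_std(metropolis(data8, sigma=0.5))
mean13, std13 = mean_and_std(metropolis(data13, sigma=0.5))
print(mean8, std8, mean13, std13)
```

The spread of the sampled points approximates the posterior uncertainty of the parameter. For this simple model the posterior standard deviation is the noise level divided by the square root of the number of data points, so the 13-point case gives a narrower distribution than the 8-point case.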

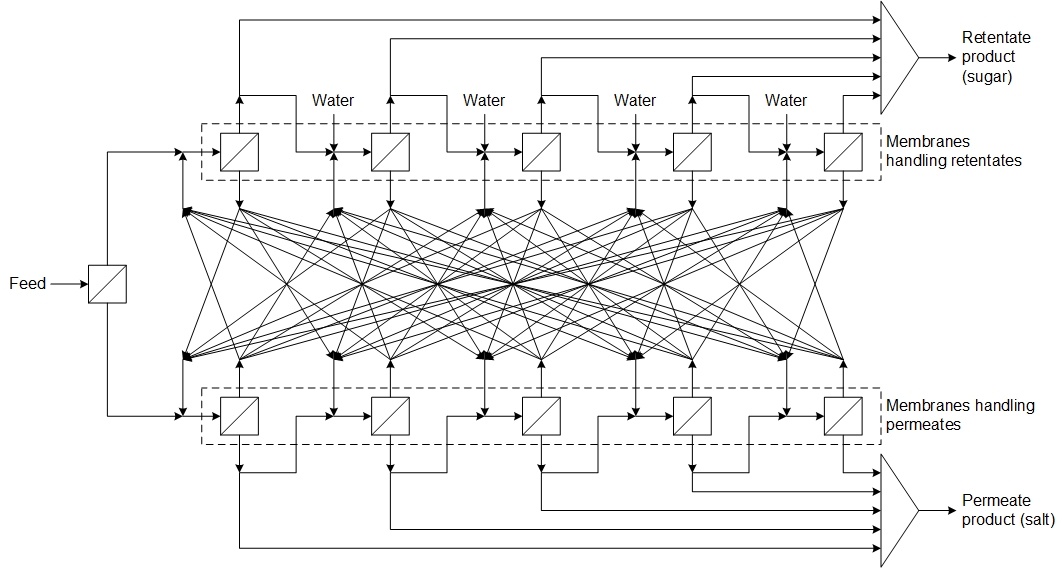

Growth in the global economy has made water a limited resource in both quantity and quality. The freshwater resources available for our use are less than 1 % of the total water on the Earth. Reverse osmosis (RO) desalination technology has become popular over the last decade due to its potential to produce freshwater from seawater. However, energy consumption in RO plants remains high, and the discharge of concentrated brine from the plants has an impact on the environment.

This study aims to analyse the energy efficiency of various RO-based desalination processes. Firstly, single-stage RO with a pressure exchanger (PX) is analysed. The minimum normalized specific energy consumption (NSEC) is reduced from 5.00×10⁵ J/m³ to 2.05×10⁵ J/m³ by adding a PX with an efficiency of 0.95. Secondly, a simulation of multi-stage RO with PX is carried out. It is found that two-stage RO reduces the minimum NSEC by 5.4% compared with single-stage operation and increases recovery by 13.6%. Even though a multi-stage design may require higher capital and operating costs, an RO design with an optimised number of stages has certain advantages.
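As a rough illustration of why a pressure exchanger lowers the minimum energy demand, the sketch below uses a textbook idealization rather than the model of this study: the applied pressure is assumed to equal the brine osmotic pressure at the outlet, salt rejection is assumed complete, and the pump is assumed ideal. Under these assumptions the dimensionless specific energy, normalized by the feed osmotic pressure, is (1 − η(1 − Y)) / (Y(1 − Y)), with recovery Y and PX efficiency η. The function names are hypothetical, and the values are not expected to reproduce the figures quoted above, which are given in J/m³.

```python
def nsec(recovery, px_efficiency=0.0):
    """Idealized dimensionless specific energy consumption of single-stage RO.

    Assumes operation at the thermodynamic limit (applied pressure equal to
    the outlet brine osmotic pressure), complete salt rejection, and an ideal
    pump. px_efficiency is the fraction of brine pressure energy recovered.
    """
    return (1.0 - px_efficiency * (1.0 - recovery)) / (recovery * (1.0 - recovery))


def min_nsec(px_efficiency=0.0, n_grid=999):
    """Grid search over recovery; returns (minimum NSEC, recovery at minimum)."""
    candidates = []
    for i in range(1, n_grid + 1):
        recovery = i / (n_grid + 1)
        candidates.append((nsec(recovery, px_efficiency), recovery))
    return min(candidates)


if __name__ == "__main__":
    for eta in (0.0, 0.95):
        value, recovery = min_nsec(eta)
        print(f"PX efficiency {eta}: min NSEC {value:.3f} at recovery {recovery:.3f}")
```

In this idealization, energy recovery both lowers the minimum and shifts it to a lower recovery, because the energy left in the brine is no longer wasted.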

In the ongoing research, membrane distillation (MD) and pressure-retarded osmosis (PRO) will be combined with the multi-stage RO process to improve the overall energy efficiency. MD is a thermally driven membrane process that produces pure water, and PRO is an osmotically driven membrane process in which an external pressure, lower than the osmotic pressure difference, is applied on the higher-salinity solution side. The use of these processes is limited to cases where a low-cost heat source, e.g. waste heat, and an inexpensive lower-salinity solution, e.g. wastewater, are available. Since MD is less sensitive to concentration, it is expected to further concentrate the brine output, and the highly concentrated brine is assumed to allow PRO to generate more energy and reduce the minimum NSEC of the total process.

Heat ingress from the atmosphere into the LNG inside a tank, driven by the temperature difference, causes continuous evaporation of LNG, which increases the pressure and temperature. It is therefore necessary to continuously estimate the changes in pressure and temperature inside the tank using an accurate numerical model in real-time operation. To this end, a new methodology was developed in this work to estimate the total amount of boil-off gas (BOG), together with its composition and physical properties, more accurately than the conventional method under various operating conditions.

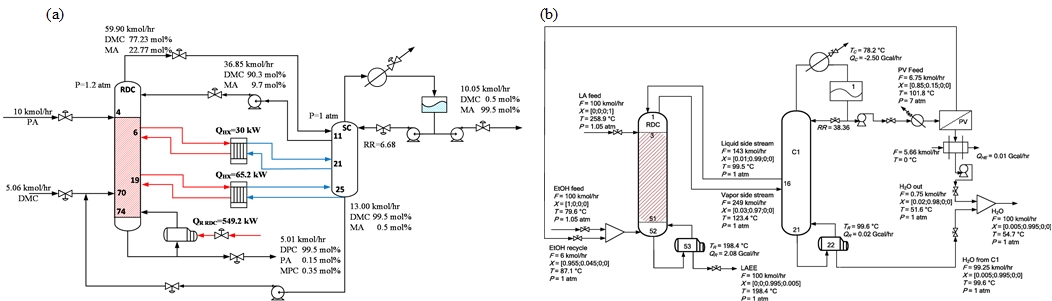

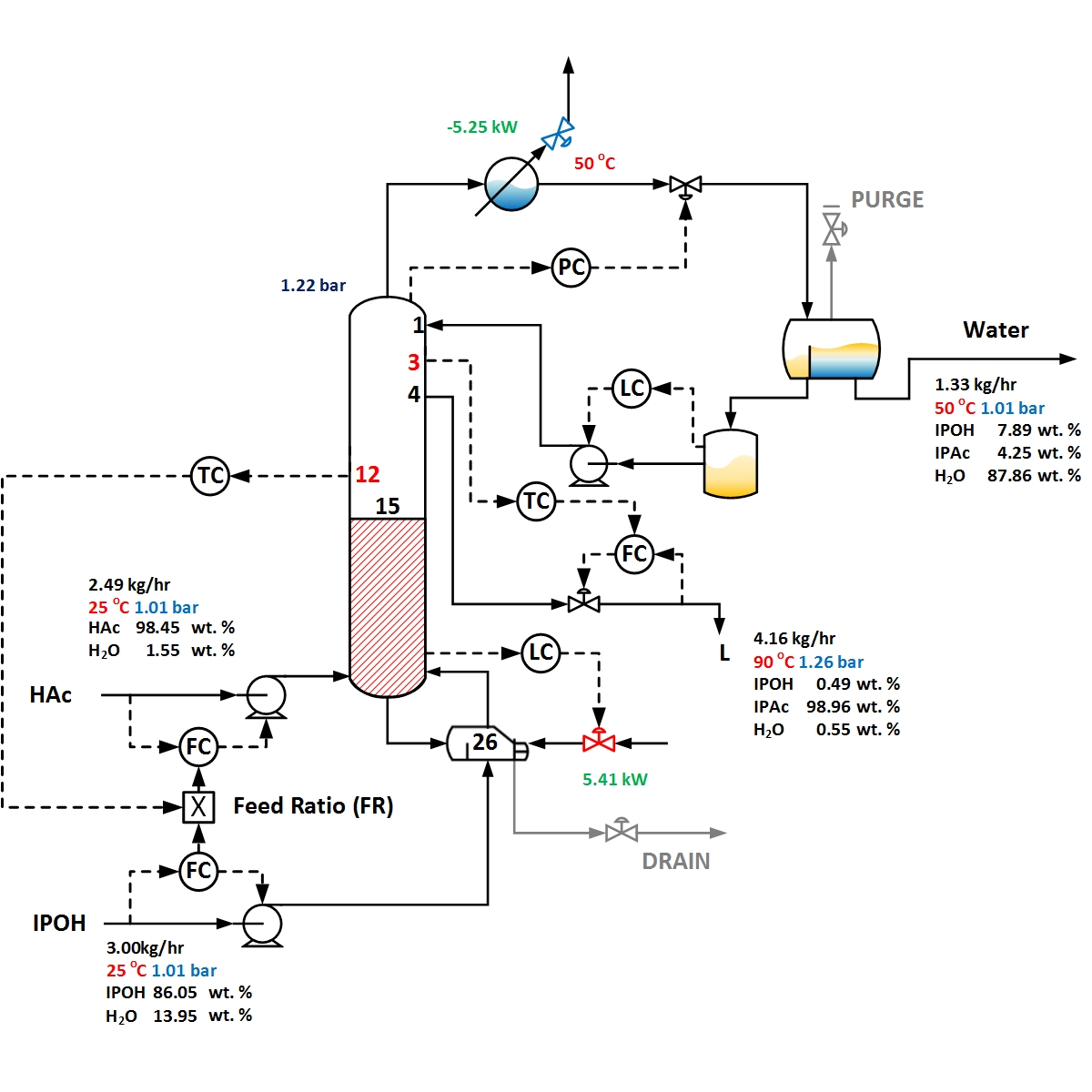

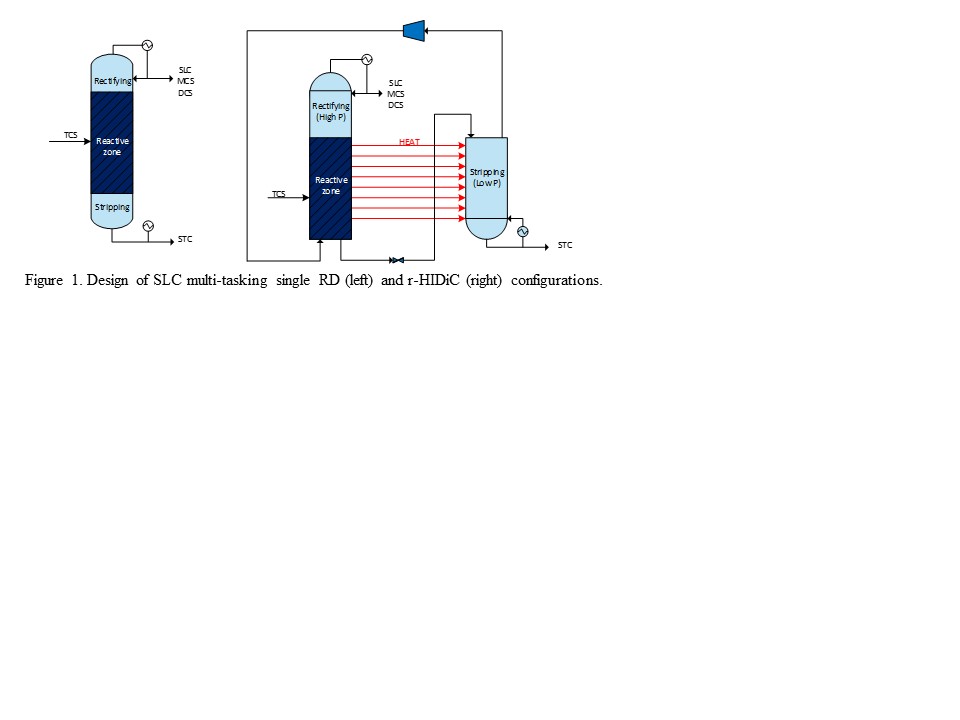

This work focuses on the efficient design of reactive distillation (RD) processes using various heat-integration methods and membrane arrangements in order to enhance energy savings. It is well known that thermally coupled, multi-effect, and external heat-integration approaches can reduce energy consumption in comparison with conventional distillation systems. Therefore, in this work, two processes are investigated to demonstrate that hybrid configurations combining various heat-integration methods and additional units (such as pervaporation, PV) can provide further energy reduction compared with the conventional RD configuration. The first process is diphenyl carbonate (DPC) production, chosen to show the synergistic effect obtained when thermal coupling and external heat integration are combined in the same reactive distillation sequence. The results show that, in the DPC production process, the hybrid heat-integrated configuration can reduce energy consumption by 47% in comparison with the conventional RD configuration. The second process is ethyl levulinate (LAEE) production, investigated to show the synergistic effect obtained when thermal intensification is implemented in a hybrid RD-PV configuration. The hybrid heat-integrated configuration assisted by a PV arrangement with an excess-ethanol (EtOH) design can save around 73% of the energy consumption compared with the conventional LAEE RD configuration. Figure 1 shows the flowsheets of the hybrid configurations for the DPC and LAEE production processes. Finally, several control schemes for the DPC process are investigated, and their control performance is tested on this highly integrated configuration. The results show that the best control scheme has good disturbance rejection for throughput and feed composition disturbances.

For chemical reactor systems using a phase transfer catalyst (PTC), a third liquid phase that is insoluble in both the aqueous solution and the organic solvent can be separated by regulating the concentrations of salt and catalyst. Previously, our research group demonstrated that applying an alternating current (AC) voltage during formation of the third-phase layer could increase the product yield of a phase-separable reactor using polyethylene glycol (PEG) as the PTC. The AC application is considered to change the properties of the third phase, thereby accelerating mass transfer of the product between the two phases. In order to intensify the phase-separable reactor with a third phase, it is necessary to optimize the flow characteristics in the organic phase and the third phase so as to maximize the mass flux stably.

In the present study, the influence of the flow characteristics in the third liquid phase on the dynamic behavior of a batch-type phase-separable reactor is investigated for the synthesis of phenyl benzoate using PEG. The time variation of the conversion of benzoyl chloride and of the apparent selectivity of benzoyl chloride to phenyl benzoate is analyzed with a rate-based model. When experiments were carried out with different types of impeller, a higher apparent selectivity was obtained with the six-blade flat paddle impeller (6FPI). A clear vertical circulation flow in the third phase was also observed in flow visualization experiments, especially when the 6FPI and two types of reverse pitched blade impeller were used. Hence, it is shown experimentally and numerically that the vertical circulation flow in the third phase is a factor that enhances the overall reaction efficiency and that it can be controlled by the type of impeller, its rotational speed, and the concentration of potassium hydroxide used to prepare the third phase.

Pure, isomorphic, round, and free-flowing dimethyl fumarate granules in a size range of 250–2000 μm were successfully produced directly from esterification through a three-in-one intensified process comprising three distinct steps of reaction, crystallization, and spherical agglomeration (SA) in a 0.5 L jacketed glass stirred tank. Dimethyl fumarate was prepared by sulfuric acid catalyzed esterification of fumaric acid with methanol. The reaction temperature was kept below the maximum allowable limit of 65 °C, as determined from the reaction kinetics, to avoid a runaway situation. The dissolution rate of the primary crystals of dimethyl fumarate was inversely proportional to the particle size, which was strongly affected by the antisolvent addition and cooling schemes during crystallization. However, the dissolution rate of the round granules depended mainly on the exterior dimension of the granules and not so much on the primary crystal size inside the granules. The mechanical properties, such as density, porosity, Carr's index, friability, and fracture force, of round dimethyl fumarate granules generated in (1) three-in-one processes with final temperatures of either 5 or 25 °C (Three-in-one I and II) and (2) SA of dimethyl fumarate carried out separately from the train of reaction and crystallization at either 5 or 25 °C (SA I and II) were thoroughly studied and compared. The concept of scale-up for Three-in-one I and II was also verified in a 10 L jacketed glass stirred tank. Powder manufacturability, such as flowability, blend uniformity, and compressibility, was substantially enhanced by spherical agglomeration. The free-flowing and easy-to-pack properties added to dimethyl fumarate, together with the intrinsic slip planes in its crystal lattice, would make direct compaction into tablets feasible.

The Natuna gas field, located in the Natuna Sea and discovered in 1973, is one of the largest natural gas reserves in Indonesia, with estimated natural gas reserves of 222 TCF. However, the use of Natuna gas is still hampered by its very high CO2 content, which reaches 71%, while the methane content is around 28%. Dry reforming of methane (DRM) is one potential way to address this problem: it converts CH4 and CO2 into a synthesis gas containing CO and H2, a raw material that can be used to manufacture intermediate or end products of the petrochemical industry, such as acetic acid.

The conversion of carbon dioxide and methane to acetic acid is carried out in three steps. In the first step, carbon dioxide and methane are reformed to produce synthesis gas at a temperature of 800 °C and a pressure of 1 bar. In the second step, the synthesis gas is converted to methanol at a temperature of 150 °C and a high pressure of 50 bar. In the third step, acetic acid is produced by reacting methanol and CO in the methanol carbonylation process. The modeling and simulation of the acetic acid production were conducted using Aspen Hysys v.10, considering mass and heat balances. A cubic equation of state was applied for the reforming and methanol processes. In order to produce 71 MTPD of acetic acid, the feed flow rates of CH4 and CO2 are 190 MTPD and 520 MTPD, respectively. The total energy required is 46.3 MMBtu per ton of acetic acid. The acetic acid has a purity of 99.4%, with a methanol concentration of 500 ppm and a moisture content of 5,700 ppm.

Keywords: Dry reforming of methane, Modelling, Simulation, Acetic Acid
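The reported flow rates in the abstract above can be put on a molar basis with a short calculation. The sketch assumes that MTPD means metric tons per day and that the three steps follow the ideal stoichiometry CH4 + CO2 → 2 CO + 2 H2, CO + 2 H2 → CH3OH, and CH3OH + CO → CH3COOH, so that one mole each of CH4 and CO2 could give at most one mole of acetic acid. The function name and the rounded molar masses are illustrative.

```python
# Molar masses in g/mol (rounded standard values).
MOLAR_MASS = {"CH4": 16.043, "CO2": 44.009, "CH3COOH": 60.052}


def molar_flow(mass_tpd, species):
    """Convert metric tons per day to 10^6 mol per day (1 t = 10^6 g)."""
    return mass_tpd / MOLAR_MASS[species]


def carbon_yield(ch4_tpd, co2_tpd, acetic_acid_tpd):
    """Molar feed ratio and acetic acid yield relative to the ideal overall
    stoichiometry CH4 + CO2 -> CH3COOH (an assumption of this sketch)."""
    n_ch4 = molar_flow(ch4_tpd, "CH4")
    n_co2 = molar_flow(co2_tpd, "CO2")
    n_acid = molar_flow(acetic_acid_tpd, "CH3COOH")
    limiting = min(n_ch4, n_co2)  # maximum acetic acid if conversion were complete
    return {
        "feed_ratio": n_ch4 / n_co2,
        "yield": n_acid / limiting,
    }


if __name__ == "__main__":
    result = carbon_yield(190.0, 520.0, 71.0)
    print(f"CH4/CO2 molar feed ratio: {result['feed_ratio']:.3f}")
    print(f"Acetic acid yield vs. ideal stoichiometry: {result['yield']:.3f}")
```

On this basis the feed is close to equimolar in CH4 and CO2, and the acetic acid product corresponds to roughly one tenth of the ideal stoichiometric maximum.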

Solid particles or liquid droplets entrained in multi-phase flow in process plants can cause erosion, which may result in leakage from piping systems or equipment. Hence, it is essential to evaluate the erosion rate in order to determine the design margin and take countermeasures. To date, many models have been proposed for predicting erosion induced by particles and droplets, but their prediction accuracy differs widely. The present study aims to validate the CFD-based prediction accuracy of the major erosion models against published experimental data, for engineering applications.

Among the many erosion models proposed to predict the particle-induced erosion rate, the models of Finnie, Tabakoff & Grant, E/CRC, Oka, and DNV are commonly applied. Experimental data from the literature were used to investigate the CFD prediction accuracy of these five erosion models. CFD simulations were conducted for different flow velocities and piping geometries containing elbows and a reducer. The CFD results show that the Finnie model significantly underestimates the erosion rates, while the other four models over-predict the erosion rates in all cases. Among them, the erosion rates predicted by the Tabakoff & Grant model are closest to the experimental results, with moderate and acceptable conservativeness. Therefore, the Tabakoff & Grant model is applicable to predicting the particle-induced erosion rate in engineering applications.

In addition, CFD simulations were performed to validate the CFD prediction of the liquid-droplet-induced erosion rate, using data from the water impingement experiments conducted by Isomoto et al. The erosion models investigated are those of Haugen, Isomoto, and DNV. The CFD results show that all three models over-predict the erosion rates. Among them, the erosion rates predicted by the Haugen model are closest to the experimental results at all water impingement velocities, with moderate and acceptable conservativeness. Hence, the Haugen model is applicable to predicting the droplet-induced erosion rate in engineering applications.

A mathematical model is a powerful conceptual framework for elucidating underlying biological mechanisms and guiding further experiments. Researchers usually use equation-based models, which are deterministic and assume a homogeneous population; such models are therefore not appropriate for systems that are characterized by a high degree of localization and distribution and are dominated by discrete decisions. In this study, we developed a cell-based model that is more suitable for understanding the heterogeneity of stem cell populations, which is a challenge in bioprocessing for regenerative medicine applications.

Previously, regions where cells deviated from the undifferentiated state were observed at different positions in hiPSC colonies cultured with SNL and MEF feeder cells (Kim et al., 2014). In culture with SNL feeder cells, the deviation from the undifferentiated state occurred in the central region of the colony. In contrast, in culture with MEF feeder cells, the deviation occurred in the peripheral region of the colony. Later, it was suggested that anomalously low and high migration rates in the central and peripheral regions, respectively, led to deviation from the undifferentiated state in hiPSC colonies (Shuzui et al., 2019). Based on this hypothesis, we have developed a model in order to understand these phenomena more deeply.

Our model describes several cell behaviors, including cell division, contact inhibition, cell migration, cell-cell interaction, cell-substrate interaction, and cell deviation. Using the model to understand the spatial heterogeneity of the cell movement rate within a colony, we found that cell division was the main factor leading to a higher movement rate in the peripheral region than in the central region of the colony. The simulation results indicated that the model was able to reproduce a deviation from the undifferentiated state similar to that observed in vitro. The results also partially supported the hypothesis, derived from in vitro observations, that anomalous cell migration triggers the deviation from the undifferentiated state.

The trade of LNG is usually regulated by contracts between suppliers and buyers, which generally run for 20-25 years and provide security to buyers; however, their rigid take-or-pay clause transfers the risk of surplus volume to the buyer. New suppliers have emerged owing to liberalisation in local markets. The concomitant emergence of new small-scale customers has led to a competitive LNG market characterized by high demand variability. Consequently, regasification terminals increasingly need to purchase LNG through spot (i.e., short-term) contracts. In this study, we seek to quantify the relative benefits of shifting from long-term contracts to purchasing LNG on the spot market.

In this paper, we consider the procurement of LNG by a buyer with its own demand variability from a set of producers, each with its own selling cost. This collective demand may be met through either a long-term contract or spot market purchases. Our goal is to identify the critical conditions under which spot purchases are superior to long-term contracts and vice versa. To this end, we develop MILP models to assess the minimal procurement cost, i.e., the sum of the transportation cost and the LNG price, taking into account the dynamics of producers, shipping, and buyers. Each producer in the set is modelled with a specific production profile, storage tank and berth capacities, and an associated selling price. Similarly, the buyer is modelled with a demand that varies over the planning horizon. The voyage of the LNG carrier is modelled as depending solely on the demand at the consumption sites. The minimal procurement costs under long-term and short-term contracts are calculated using these models and compared in this study. The results of the paper can be used to develop critical insights into the benefits of different LNG procurement contracts.

The nitrogen expander and single mixed refrigerant (SMR) liquefaction processes are recognized as the most favorable options for producing liquefied natural gas (LNG) at offshore sites. These processes have a simple and compact design that makes them efficient in terms of capital cost. Nevertheless, their high operating costs, mainly due to their lower energy efficiency, remain an ongoing issue. This lower energy efficiency is primarily due to entropy generation, which depends on the temperature gradients inside the main LNG cryogenic heat exchanger. These temperature gradients are strongly dependent on the refrigerant flow rates and pressures of the refrigeration cycles. However, there are highly non-linear interactions between the process decision variables and the constrained overall energy consumption. Therefore, optimizing LNG processes to find the values of the decision variables that minimize energy consumption is still a challenging task. In this context, this study examines an optimization methodology free of algorithm-specific parameters, named “Jaya”, to improve the energy efficiency of LNG processes for offshore applications. It was found that, using the Jaya algorithm, the energy efficiency of the SMR process and the nitrogen dual expander liquefaction process can be enhanced by up to 14.3% and 11.63%, respectively, compared with their respective base cases. This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2018R1A2B6001566) and the Priority Research Centers Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (2014R1A6A1031189).
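For readers unfamiliar with the Jaya algorithm mentioned in the abstract above, a minimal sketch of its update rule follows. Each candidate moves toward the current best solution and away from the current worst, and the move is kept only if it improves the objective; apart from population size and iteration count, no tuning parameters are needed. The quadratic objective, the bounds, and the function names are placeholders for illustration; the LNG process simulation used in the study is not reproduced.

```python
import random


def jaya_minimize(objective, bounds, pop_size=20, iterations=200, seed=0):
    """Minimize `objective` over box `bounds` with the basic Jaya update.

    bounds is a list of (low, high) pairs, one per decision variable.
    Returns (best_solution, best_cost).
    """
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    cost = [objective(x) for x in pop]
    for _ in range(iterations):
        # Best and worst members are fixed at the start of each iteration.
        best = pop[cost.index(min(cost))]
        worst = pop[cost.index(max(cost))]
        for k in range(pop_size):
            candidate = []
            for j, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                xj = pop[k][j]
                # Move toward the best and away from the worst solution.
                new = xj + r1 * (best[j] - abs(xj)) - r2 * (worst[j] - abs(xj))
                candidate.append(min(max(new, lo), hi))  # clip to the bounds
            c = objective(candidate)
            if c < cost[k]:  # greedy acceptance: keep only improvements
                pop[k], cost[k] = candidate, c
    i = cost.index(min(cost))
    return pop[i], cost[i]


def toy_energy(x):
    """Placeholder objective with its minimum at (1, 2); not a process model."""
    return (x[0] - 1.0) ** 2 + (x[1] - 2.0) ** 2


if __name__ == "__main__":
    solution, value = jaya_minimize(toy_energy, [(0.0, 5.0), (0.0, 5.0)])
    print(solution, value)
```

In a process application the objective would wrap a simulator call returning, for example, the specific compression energy, with constraint violations handled by a penalty term; that coupling is outside the scope of this sketch.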

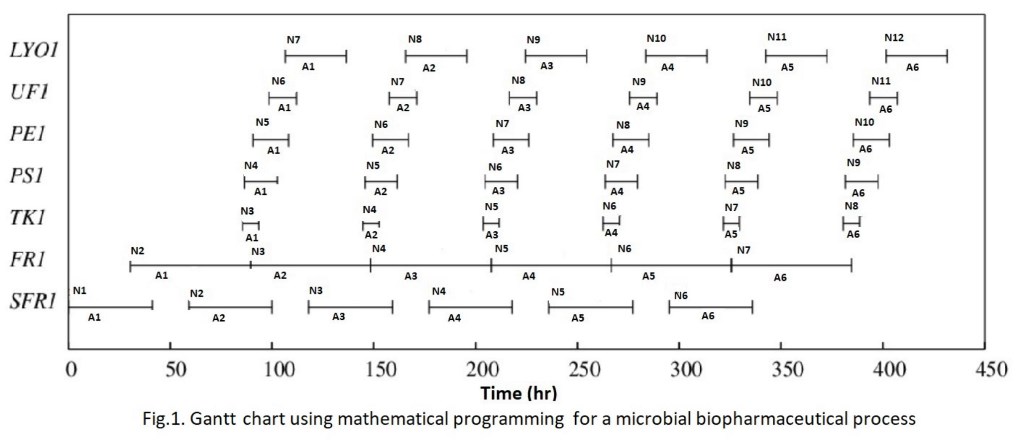

Biopharmaceuticals are therapeutic drugs derived from biological sources, such as monoclonal antibodies, engineered protein hormones, cytokines, and vaccines. As the biopharmaceutical sector has matured, pressure is increasing to design flexible process configurations that can cope with the process variability associated with the manufacturing of biopharmaceuticals. Owing to the biological nature of the reactants, it is challenging to maintain the required purity of the final product and to meet other quality control measures. Given the risk of high clinical failure linked to drug development, coupled with greater process variability, the design of decision support systems for multi-product biopharmaceutical facilities remains a challenge. A lot of work has been done on planning, scheduling, and optimization in process systems engineering (PSE), and increasing efforts have recently been made to understand process design and optimization in the biopharmaceutical industry. The purpose of the present study is therefore to develop novel models to fill the gap between computer-aided process design tools and mathematical optimization and scheduling. In the present work, an attempt is made to compare process scheduling using mathematical programming with conventional software tools such as SchedulePro® [1] to explore techno-economic benefits, if any. An example adopted from the SchedulePro® library, on a microbial biopharmaceutical process, has been modelled and validated using mathematical programming based approaches [2]. The Gantt chart is given in Fig. 1. Another case study, a process for the production of Lethal Toxin Neutralizing Factor (LTNF) from E. coli for the treatment of snakebite, has also been considered for validation of the proposed model.

References

[1] SchedulePro® software, Intelligen, Inc., USA: http://intelligen.com/schedulepro_overview.html

[2] M.A. Shaik; A. Dhakre; N. Patil; A.S. Rathore, Capacity optimization and scheduling of a multiproduct manufacturing facility for biotech. therapeutic products, Biotechnol. Prog., vol. 30, no. 5, pp. 1221-1230, 2014.

Recently, lignocellulosic biomass has emerged as a renewable, carbon-neutral energy source that reduces environmental pollution. However, it is difficult to store because of its weak water resistance, and it has a lower heating value than fossil fuels. To overcome these problems, a pretreatment process (crushing, compression molding, carbonization, or torrefaction) is required; in this study, torrefaction was selected. Removing moisture through torrefaction increases the energy per unit weight, which is advantageous for storage and transportation and ultimately improves the fuel properties. However, if the process time is too short, the heating value is not increased, whereas an excessively long process time can decrease the useful heating value, so proper conditions are needed. In this study, a one-dimensional heating value prediction model was developed for optimization of the torrefaction process. A comparative analysis was conducted against the results of torrefaction experiments (200, 230, and 270 °C; 20, 30, and 40 min). Steuer's heating value estimation equation (r² = 0.937, RMSE = 11.664) was selected as the most suitable empirical formula. Finally, the optimal torrefaction conditions were derived for the given process conditions through a case study.

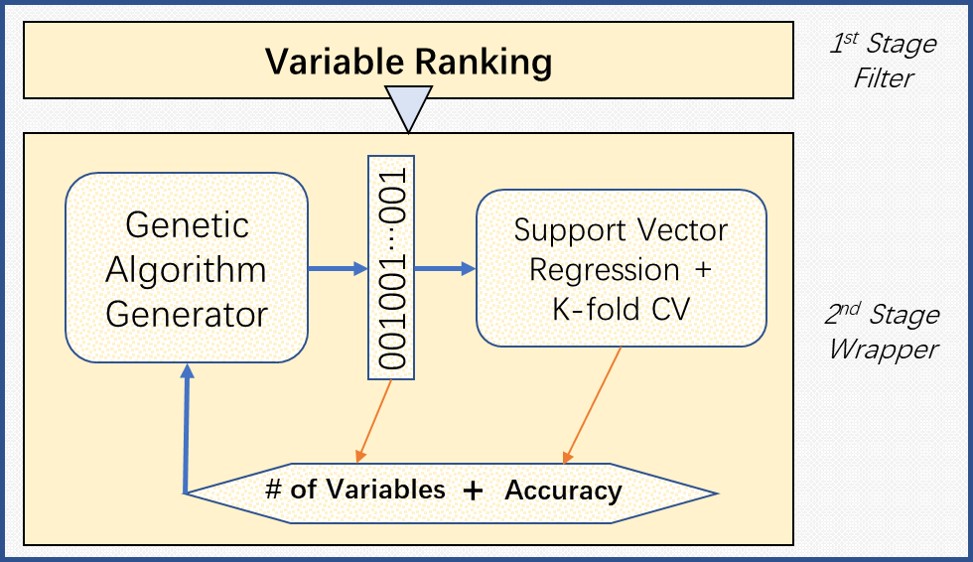

Fluid catalytic cracking (FCC) is one of the most critical thermal conversion processes in refineries, and modeling of the FCC process is a useful tool to investigate, simulate, and optimize this complex industrial process. The selection of key variables in FCC process modeling mainly relies on design handbooks or operating experience. However, the causal relationship between modeling variables and target variables may vary over time owing to changing operating conditions, flexible feedstocks, and other issues that arise under uncertainty. It is essential to analyze in real time the key variables that have an impact on the target variable. A filter-wrapper hybrid feature selection strategy is proposed in our work to identify the key variables in an FCC process with respect to given target variables. In the filter stage, irrelevant variables are screened out by the ReliefF algorithm from a dataset that contains 445 candidate variables. Next, in the wrapper stage, a genetic algorithm (GA) is used to generate a large number of subsets, and support vector regression (SVR) is used to test the performance of the different subsets with a multi-objective criterion that considers both the prediction error and the size of the subset. For demonstration, a process variable (the reactor pressure) and product indices (gasoline yield and diesel yield) are chosen as the targets. The results indicate that the hybrid strategy can reduce the size of the variable subset by over 92% within 30 min, which suggests its potential for implementation in industrial scenarios.

Salts are generally prepared by acid–base reaction in relatively large volumes of organic solvents, followed by crystallization. In this study, the potential for preparing a pharmaceutical salt between haloperidol and maleic acid by a novel solvent-free method using a twin-screw melt extruder was investigated. The pH–solubility relationship between haloperidol and maleic acid in aqueous medium was first determined, which demonstrated that 1:1 salt formation between them was feasible (pHmax 4.8; salt solubility 4.7 mg/mL). Extrusion of a 1:1 mixture of haloperidol and maleic acid at the extruder barrel temperature of 60 °C resulted in the formation of a highly crystalline salt. The effects of operating temperature and screw configuration on salt formation were also investigated, and those two were identified as key processing parameters. Salts were also prepared by solution crystallization from ethyl acetate, liquid-assisted grinding, and heat-assisted grinding and compared with those obtained by melt extrusion by using DSC, PXRD, TGA, and optical microscopy. While similar salts were obtained by all methods, both melt extrusion and solution crystallization yielded highly crystalline materials with identical enthalpies of melting. During the pH-solubility study, a salt hydrate form was also identified, which, upon heating, converted to anhydrate similar to that obtained by other methods. There were previous reports of the formation of cocrystals, but not salts, by melt extrusion. 1H NMR and single-crystal X-ray diffraction confirmed that a salt was indeed formed in the present study. The haloperidol–maleic acid salt obtained was nonhygroscopic in the moisture sorption study and converted to the hydrate form only upon mixing with water. Thus, we are reporting for the first time a relatively simple and solvent-free twin-screw melt extrusion method for the preparation of a pharmaceutical salt that provides material comparable to that obtained by solution crystallization and is amenable to continuous manufacturing and easy scale up.

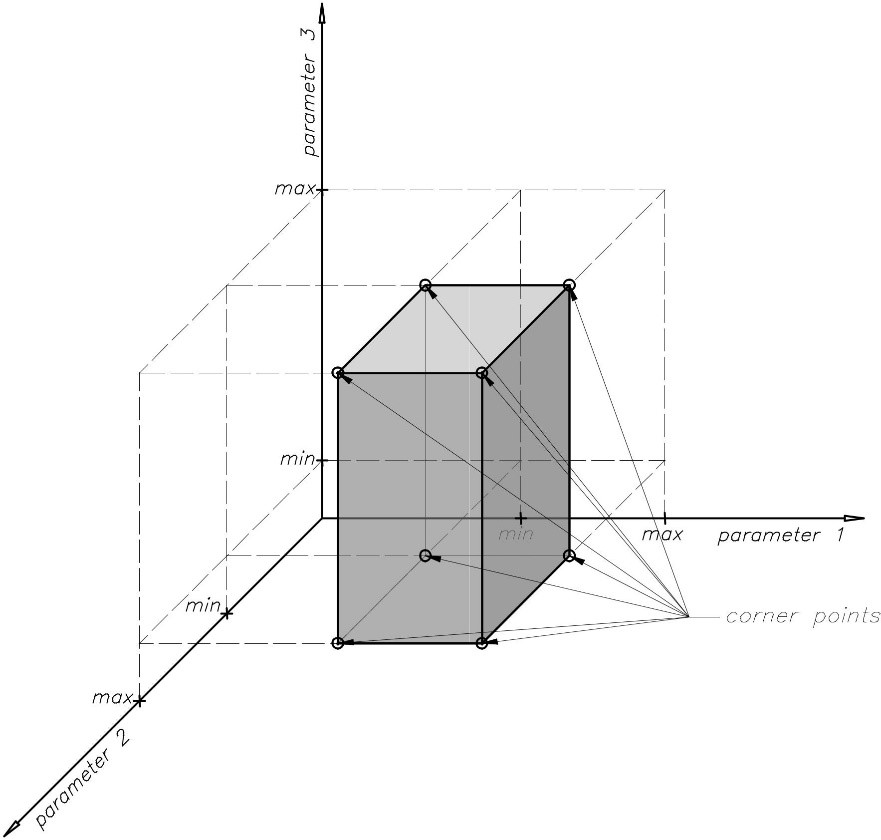

The United Nations 2030 Agenda for Sustainable Development has dedicated Goal 6 (Clean Water and Sanitation) to better water management, with 8 targets and 11 indicators identified for progress monitoring. Common strategies to achieve better water management include the “4Rs” techniques: reduction of pollution at source, removal of contaminants from wastewater, reuse of wastewater, and recovery of by-products. Within the process systems engineering community, systematic techniques aimed at designing sustainable chemical processes have been developed over the past three decades. One of the most promising is arguably process integration, with pinch analysis and mathematical programming tools developed specifically to handle water management issues. However, most of the techniques developed so far are meant for water network systems at steady state, i.e. without changes in process conditions. This paper presents a new design methodology, based on a recently developed corner point method, for the synthesis of flexible water network systems. In this case, the flowrate and purity of the water sources and sinks may change periodically over time. Hence, the synthesised water network should be able to absorb the changes in the sources and the requirements of the sinks. A literature example is used to illustrate the proposed methodology.

Monoclonal antibodies (mAbs) are an important fraction of the growing biopharmaceutical market. The rapidly expanding demand for mAbs is making it necessary to optimize the production processes to reduce manufacturing costs and time. Generally, production in continuous mode can improve process efficiency. Continuous manufacturing has previously been studied for individual process units in the upstream, e.g., perfusion cultivation, and in the downstream, e.g., chromatographic separation using simulated moving beds. Integrated continuous production modes have been demonstrated experimentally, and stability in end-to-end operation has been shown. This work aims to explore the available design options and map favorable production modes at different production scales and sizes. The work also aims to explore the impact of recent developments in cell design and advances in cell tolerance on process performance and the resulting favorable operating modes.

Production data has been obtained from a pilot scale research facility for the production of mAbs from Chinese hamster ovary (CHO) cells. Different models for the large-scale cultivation and chromatography separation have been fitted against the production data, and the appropriate models were selected. After confirming the model performance using the production data, we performed economic process assessment. Different scenarios were considered regarding operation modes, i.e., batch or continuous, and equipment, i.e., single-use or multi-use, for the individual units as well as the end-to-end process.

The simulation results indicated that the favorable scenarios varied in the upstream and downstream depending on the production scale and the CHO cell performance. The superiority of the fully integrated continuous process has been seen regarding productivity. Sensitivity analysis revealed the relevance of the cell performance. This finding will open up a new research opportunity for PSE to tackle simultaneous design of cells, products, and processes.

It is very important to consider all the units, and the operating parameters of the individual units, in gas-oil-separation-plant (GOSP) optimization. However, the differences in the governing principles of these units make it extremely difficult to optimize GOSP operation. In addition, in real operation, the optimum parameters for individual units are difficult to implement, and the optimum control conditions for each unit are difficult to establish because all the units are interlinked.

The current practice is to set the parameters determined at the design stage by simple laboratory tests, without accounting for the effect of changes in temperature (a fixed ambient temperature is assumed) and pressure, such as the reservoir pressure, on the crude oil properties. This reduces the quantity of crude oil at the stock tank and hence the profitability of the plant.

The objective of this project is to develop a generic integrated framework for maximizing oil recovery by optimizing the GOSP parameters. A typical Saudi Aramco GOSP, consisting of multi-stage separators, a heater-treater, and stabilization units along with the stock tank, is considered to mimic the oil train. Among the various parameters, only controllable parameters such as pressure and temperature were considered as optimization parameters to achieve the maximum oil recovery.

The GOSP model was developed using the “Omegaland” software. To evaluate the effect of the various parameters, many simulation runs were performed to generate data. Based on the generated data, a model was developed using artificial intelligence techniques. This model served as a tool for optimizing the controllable parameters to maximize oil recovery, which is the ultimate goal of the GOSP.

The generic integrated framework model serves as a guide for determining the optimum operating conditions that maximize oil recovery, resulting in a significant monetary gain through improved GOSP efficiency.

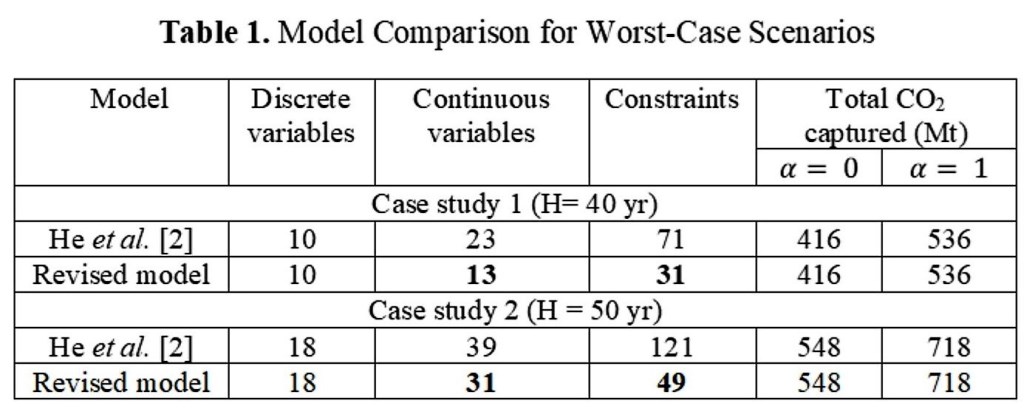

Carbon capture and storage (CCS) is one of the options for mitigating global warming by reducing carbon dioxide emissions. It involves separating CO2 at the source from gaseous effluent streams, transporting it to suitable storage sites, and storing it permanently in underground geological reservoirs. Since CCS is a resource-intensive process with multiple uncertainties, it must be modeled and validated under several circumstances for practical use. This study focuses on worst-case analysis based on a continuous-time formulation for CCS systems under uncertainty. In the model, the end time of the operating life of CO2 sources, the maximum storage capacity of different sinks, and the carbon footprint of compensatory power are considered as uncertain parameters. The recent work of Shaik and Kumar [1] has been extended to consider these uncertainties. Two case studies are solved to validate the model over different time periods (40 yr and 50 yr). Similar to He et al. [2], the revised model [1] is also solved for different risk levels (α = 0 and α = 1), which demonstrates the modification and simplification of the model [1] compared with that of He et al. [2]. As shown in Table 1, the same optimal solutions as in the literature are obtained for the minimum and maximum amounts of total CO2 captured. Compared with the worst-case solutions, the nominal solutions (without uncertainty) correspond to higher-risk or possibly infeasible zones.

References

[1] M. A. Shaik; A. Kumar, Simplified model for source–sink matching in carbon capture and storage systems, Ind. Eng. Chem. Res., vol. 57, no. 9, pp. 3441–3442, 2018.

[2] Y.-J. He; Y. Zhang; Z.-F. Ma; N. V. Sahinidis; R. R. Tan; D. C. Y. Foo, Optimal source–sink matching in carbon capture and storage systems under uncertainty, Ind. Eng. Chem. Res., vol. 53, no. 2, pp. 778–785, 2014.

The grand challenges facing human societies are all closely interconnected with the sustainable provision and consumption of energy, water, and material resources for constantly growing and developing populations, as well as the subsequent processing and management of waste and pollution. In this work, a series of decision-support platforms are developed that combine a comprehensive database, agent-based simulation, and resource technology network optimization to deal with the challenges of energy, water, food, and waste systems through a nexus approach for a circular economy. As key components of smart city development strategies, these data-driven systematic tools and models can simulate different constraints and targets seamlessly with respect to environmental, economic, and social costs and benefits in a bottom-up approach to inform infrastructure planning, investment, and decision making for city regions globally.

From the macro level down to detailed systems, smart energy technologies, operational strategies and market design will be specifically discussed. To tackle increasing global energy demand and the climate change problem, the integration of distributed resources, renewable energy, and negative emission technologies provides promising solutions for a sustainable and resilient energy future. Compared with the traditional design and operation of energy systems, a novel process that combines real-time prediction and optimization, model predictive control, demand-responsive schemes and other game-theoretic decentralized market strategies will improve energy system performance on both the supply and demand sides. State-of-the-art modelling, machine learning, and blockchain technologies will be introduced and applied to smart energy systems for overall economic and environmental benefits.

Although a global optimization procedure using a randomized algorithm and a commercial process simulator is easier to implement for complex design problems, its main drawback is the heavy computational load. Because the process simulation is executed repeatedly to calculate the objective function, a long computation time is unavoidable when deriving the optimal solution, especially when the target process has recycle flows that require convergence calculations. Reducing the number of iterations is therefore crucial for such an optimization procedure.

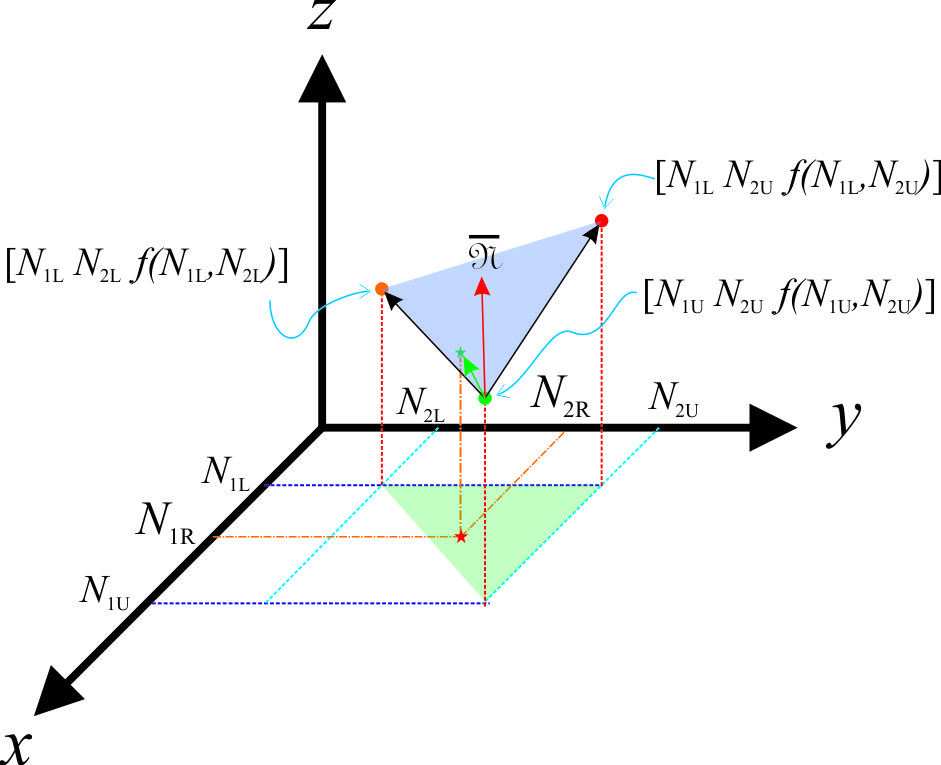

Although some design variables, such as the number of stages of a distillation column, can take only integer values, most optimization algorithms propose continuous values as the next promising search point. The process simulation must therefore be executed after rounding them to integer values, and this rounding step affects the selection of the next search point in the optimization procedure. In this work, a procedure for estimating an objective function with integer design variables is proposed. In the proposed procedure, the values of the objective function at the vertices of the hyper-triangle that contains the suggested next search point are used to estimate the objective function at that point. Figure 1 shows a two-dimensional case. In this example, the objective function at point (NR1, NR2), f(NR1, NR2), is estimated from the objective function values at three integer points, (N1L, N2L), (N1L, N2U) and (N1U, N2U).
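The two-dimensional estimation described above can be sketched as follows. The abstract does not state the interpolation rule, so linear (barycentric) weighting over the triangle is an assumption here, as are the function names, the choice of the mirrored triangle for points below the cell diagonal, and the toy objective used in place of a process simulation.

```python
from math import floor


def estimate_objective(f, x1, x2):
    """Estimate f at a continuous point (x1, x2) from three integer points.

    Assumption: linear (barycentric) interpolation over the triangle of
    integer points that contains (x1, x2). Above the cell diagonal the
    triangle is (N1L, N2L), (N1L, N2U), (N1U, N2U), as in the
    two-dimensional example; below it the mirrored triangle is used.
    """
    n1l, n2l = floor(x1), floor(x2)
    n1u, n2u = n1l + 1, n2l + 1
    t1, t2 = x1 - n1l, x2 - n2l  # fractional position inside the unit cell

    if t2 >= t1:
        vertices = [(n1l, n2l), (n1l, n2u), (n1u, n2u)]
        weights = [1.0 - t2, t2 - t1, t1]
    else:
        vertices = [(n1l, n2l), (n1u, n2l), (n1u, n2u)]
        weights = [1.0 - t1, t1 - t2, t2]

    # Vertices with zero weight are skipped so no simulation is wasted on them.
    return sum(w * f(*v) for w, v in zip(weights, vertices) if w > 0.0)


def toy_objective(n1, n2):
    """Hypothetical stand-in for a simulator call at integer stage counts."""
    return 2.0 * n1 + 3.0 * n2 + 1.0


if __name__ == "__main__":
    print(estimate_objective(toy_objective, 10.25, 20.75))
```

In a real optimization loop, the values at integer points would come from the process simulator and could be stored for reuse, since neighbouring search points often share vertices.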

The proposed procedure is applied to the optimal design problem of a distillation process. Simulated annealing is used as the randomized algorithm. The number of iterations was reduced significantly, and the solutions were the same as or better than those obtained by the traditional optimization implementation. In the presentation, the effect of normalizing the design variables is also explained.

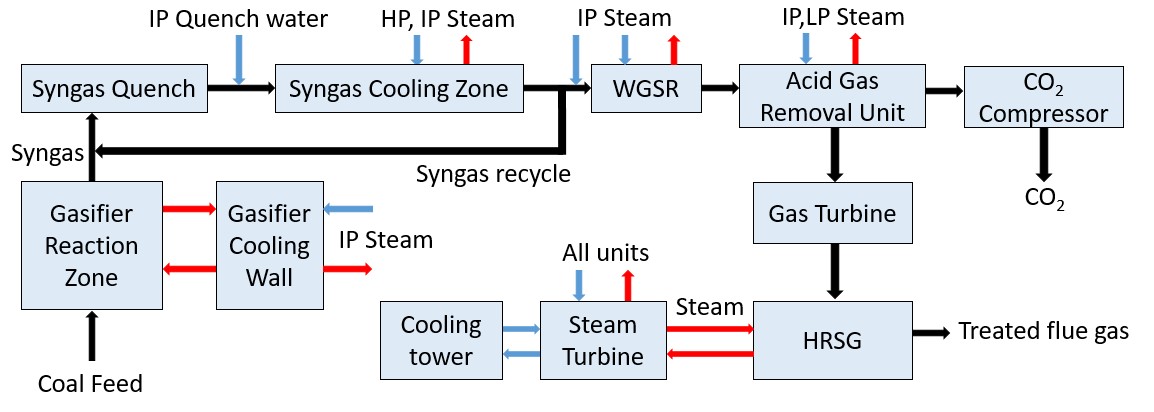

Although the efficiency and energy contribution of renewable energy are growing rapidly, coal is still an important fuel for meeting global energy demand. The integrated gasification combined cycle (IGCC) offers high efficiency and minimal cost penalties compared with conventional coal power plants. Since an operational change in one unit of the integrated IGCC process can influence the other units, techno-economic analysis for performance and cost assessment of the overall integrated process is essential. Furthermore, the techno-economic analysis of IGCC with a carbon capture process (CCP) for various types of coal is important because about 53% of global coal reserves are of low rank with various qualities.

In this study, a techno-economic analysis of the entire syngas process, including the carbon capture unit, was conducted for a 500 MW-class IGCC with a Shell gasifier using various types of coal. The process simulation of the integrated syngas purification process was performed using Aspen Plus. The performance of the Shell gasifier using various coals was simulated using the gPROMS software package.

First, the amounts of oxygen and intermediate-pressure steam fed to the gasifier were optimized to maximize the cold gas efficiency of the Shell gasifier. A parametric analysis was conducted over an oxygen-to-coal feed rate ratio of 0.65 to 0.85 and a steam-to-coal feed rate ratio of 0 to 0.2. Then, the carbon capture efficiency, ranging from 80% to 95%, was optimized to minimize the energy consumption of the syngas purification process with a CCP. Finally, using the optimized conditions of the gasifier and CCP, a comparative performance evaluation of four different coals was conducted for the overall IGCC process. The estimated cost of electricity (COE), considering the CO2 T&S cost and coal price, was also compared among the IGCC processes using the four coals (two bituminous coals and two sub-bituminous coals).

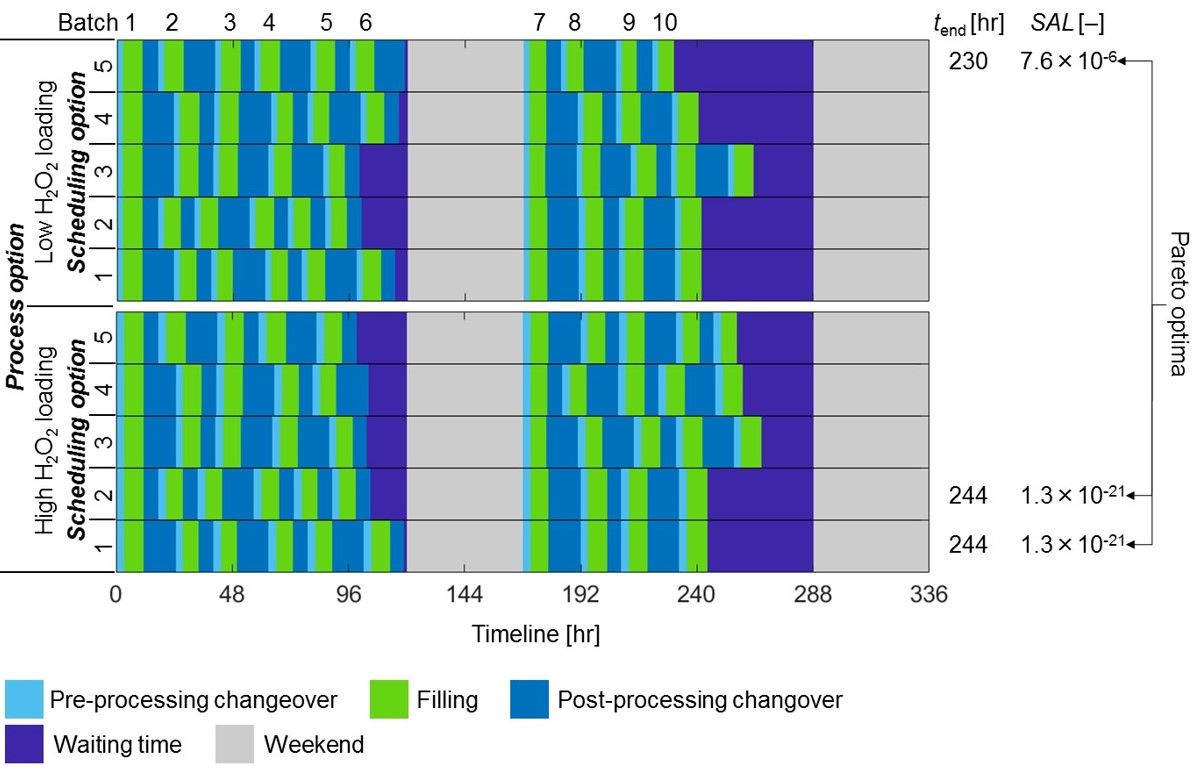

Sterile drug products, represented by injectable biopharmaceuticals, are manufactured in batch processes by filling drug solution into vials or syringes. Decontamination establishes the proper environment for filling and is typically performed by injecting hydrogen peroxide (H2O2) into environments such as isolators or clean rooms. Manufacturing of sterile drug products requires changeover processes such as decontamination and cleaning, which are time intensive. However, a model that can handle scheduling while considering the process design of decontamination has not been established.

In this work, we present a model for simultaneously designing decontamination processes and scheduling filling. The model was developed by incorporating two sub-models: a decontamination process model and a scheduling model. We defined the sterility assurance level of the products and the duration required to finish all batches to be produced as objective functions. The inputs required to calculate these two functions are batch information (i.e., the number of batches, batch size, oxidation resistance of the product, etc.) and decontamination conditions (i.e., injection rate of H2O2, relative humidity, etc.).

The model was applied to a design case of 10-batch production for three different sets of products. The calculation results revealed that batch information, especially production size and oxidation resistance of the product, is critical to the process duration. At most, 18.1% of the duration can be saved by changing the decontamination conditions and production schedule. In the future, more rigorous simultaneous optimization of decontamination processes and scheduling will be performed. We will also develop a design framework for decontamination processes by incorporating the scheduling model.

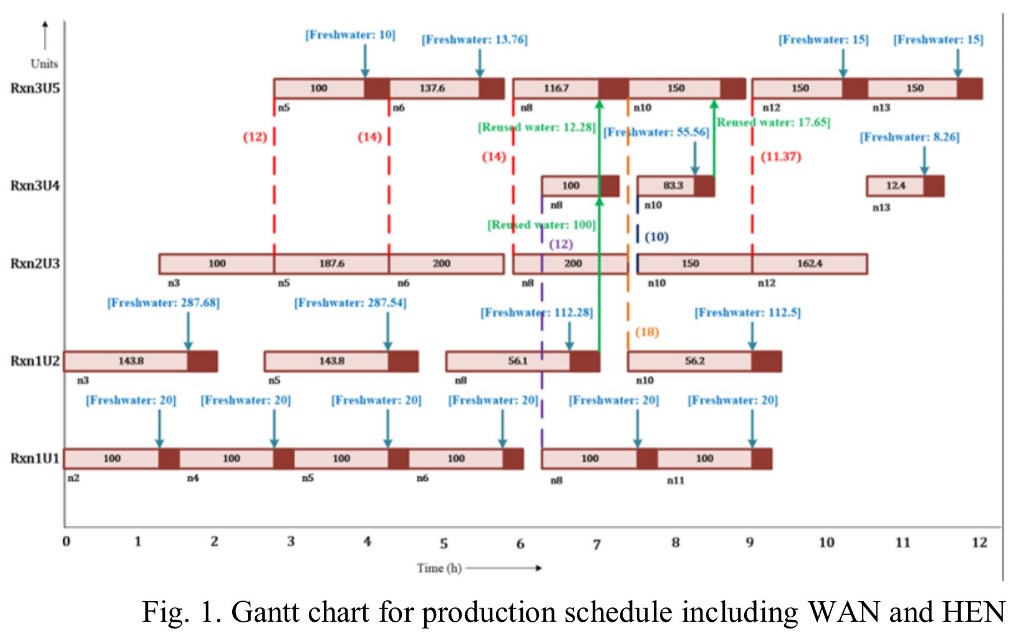

Water integration in batch processes has gained increased attention recently[1-2]. Both freshwater usage and wastewater discharge can be reduced significantly when regeneration reuse is adopted in the design of multi-contaminant water-using networks (WUNs)[3]. Designing WUNs that involve regeneration reuse is usually difficult, and becomes even harder when batch processes are considered. This paper presents a simple and systematic design approach for manually generating batch water-using networks with multiple contaminants and regeneration reuse. It is assumed that the network runs in cyclic mode and the regeneration unit in continuous mode. The design objectives are to minimize freshwater usage, wastewater discharge, regeneration flowrate and the cost of storage tanks. The proposed design approach consists of two stages. In the first stage, the batch WUNs are treated as continuous ones, and the concepts of concentration potentials and a modified iterative method are adopted to determine the concentration for regeneration and guide the network design[3]. In the second stage, heuristic rules are proposed to guide the design of intermediate storage tanks under the assumption that wastewater can be reused between adjacent batches. The effectiveness of the proposed method is illustrated by two examples from the literature, for which networks as good as or better than the published ones are obtained.

[1] Li A.; Liu C.; Liu Z. A heuristic design method for batch water-using networks of multiple contaminants with regeneration unit, Chinese J. Chem. Eng. 2019, https://doi.org/10.1016/j.cjche.2018.10.018.

[2] Li B.H.; Liang Y.-K.; Chang C.-T. Manual design strategies for multi-contaminant water-using networks in batch processes. Ind. Eng. Chem. Res. 2013, 52(5):1970–1981.

[3] Pan C.; Shi J.; Liu Z.Y. An iterative method for design of water-using networks with regeneration recycling. AIChE J. 2012, 58(2):456–465.

Chromolaena odorata L. (C. odorata), known by several common names, i.e. Siam weed, Bitter bush, Christmas bush, Devil weed, and Camphor grass, is a weed found across tropical continents. The leaves, roots and flowers of C. odorata have been used as medicinal plants for centuries. Preliminary phytochemical screening showed that C. odorata extracts contain phenolic compounds such as flavonoids, saponins, tannins and steroids. The total phenolic compounds (TPC) in medicinal plants are widely recognized for biological activities such as anti-inflammatory, antiviral, antimicrobial, anti-mutagenic, anti-carcinogenic and especially antioxidant activity. This work focuses on the antioxidant and antimicrobial activity of an ethanolic extract of C. odorata leaves. Although many solvents can be used to extract TPC from plants, ethanol with ultrasound-assisted extraction was selected. Response surface methodology coupled with a nonlinear solver was used to find the optimal extraction variables. The Central Composite Design (CCD) was employed as the sampling technique. The three studied variables are the ratio of ethanol to the dried leaves of C. odorata (X1), the aqueous ethanol concentration (X2), and the extraction time (X3). The ultrasound frequency and extraction temperature were fixed at 40 kHz and 60 °C. The maximum yield of TPC was found to be 96.49 mg GAE/g dry extract at an aqueous ethanol concentration of 57% v/v, 43 mL of solvent/g of dried sample and an extraction time of 35 minutes. The relationship between yield and variables is shown below, with a prediction error of 13%:

$\text{Yield of TPC} = 32.9036 - 8.3032\,X_1 + 6.4377\,X_2 - 8.7779\,X_3 + 8.5326\,X_2^2$
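As an illustration of how such a fitted response surface can be searched for its maximum, the sketch below evaluates the model on a grid. Several things here are assumptions not stated in the abstract: the last term is read as X2 squared, the variables are taken to be in coded (dimensionless) units, the search box of ±1.682 is the axial distance of a rotatable three-factor CCD, and a plain grid search stands in for the nonlinear solver the authors used. Because the mapping from coded to physical units is not given, the result cannot be compared directly with the reported optimum.

```python
from itertools import product

# Assumed bound on each coded variable (axial distance of a rotatable
# three-factor central composite design); not stated in the abstract.
ALPHA = 1.682


def tpc_yield(x1, x2, x3):
    """Fitted response surface, reading the final term as X2 squared."""
    return (32.9036 - 8.3032 * x1 + 6.4377 * x2
            - 8.7779 * x3 + 8.5326 * x2 ** 2)


def grid_maximum(bound=ALPHA, steps=21):
    """Return the grid point with the highest predicted yield.

    A simple exhaustive search used in place of a nonlinear solver.
    """
    levels = [-bound + 2.0 * bound * i / (steps - 1) for i in range(steps)]
    best = max(product(levels, repeat=3), key=lambda p: tpc_yield(*p))
    return best, tpc_yield(*best)


if __name__ == "__main__":
    point, value = grid_maximum()
    print("coded optimum:", point)
    print("predicted yield:", value)
```

Since the model is linear in X1 and X3 and has a positive quadratic coefficient in X2, its maximum over any box lies on the boundary, so the chosen bounds determine the answer.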

Demand for natural dyes has increased because waste from synthetic dyeing harms the environment and living organisms in water resources. Some synthetic dyes may also cause skin irritation. Natural dye is therefore becoming a better choice for dyeing natural fabrics. Teak, or Tectona grandis, is a tropical hardwood tree. Its weather resistance and beautiful surface make teak a valuable wood for outdoor furniture and house construction. Moreover, teak leaves are used for wrapping food and vegetables in rural areas, and fallen leaves are otherwise left as waste that fertilizes the soil. One of the methods for extracting natural dyes from teak leaves is ultrasonic-assisted extraction (UAE). The yield of natural dye obtained by this method depends on the type of solvent and the extraction conditions. This research studied the optimization of ultrasonic-assisted extraction of natural dye from teak leaves. Response surface methodology coupled with a nonlinear solver was employed to find the maximum yield of natural dye. The response surface methodology gives a model representing the relationship between the extraction parameters and the natural dye yield. The nonlinear solver was then used to solve the model for the maximum yield. Two solvents, water and ethanol, were studied. For each pure solvent and for the mixed solvent, the parameters considered in the optimization were the ultrasound frequency (x1), the ratio of solvent volume to dried leaves (x2), the extraction temperature (x3), and the extraction time (x4). A model representing the relationship between the extraction parameters and the natural dye yield was obtained for each pure solvent and for the mixed solvent. The optimal values of the extraction parameters were successfully obtained for both solvent conditions.

This research presents a comparative life cycle assessment (LCA) of three photoluminescent types of organic light emitting diodes (OLED): fluorescent, phosphorescent, and thermally activated delayed fluorescent (TADF) devices. OLED is garnering attention as a next-generation display technology with high luminance, high resolution, and low power consumption. However, general OLED displays employ fluorescent materials with 25% luminous efficacy or phosphorescent materials with 100% theoretical luminous efficacy that contain scarce metals such as iridium. Recent developments have led to new materials, called TADF materials, that exhibit 100% luminous efficacy without scarce metals. To analyze the environmental advantage of this new material development, we comparatively assessed the lifecycle greenhouse gas emissions (GHG) of three types of OLED employing distinct materials: fluorescent, phosphorescent containing iridium, and TADF. The functional unit of this LCA is the provision of one OLED television display. The system boundary covers raw material extraction through the use phase. The lifecycle inventory of fluorescent materials was taken from past studies, and those of phosphorescent and TADF materials were constructed from patents and publications. Energy demand for material synthesis and OLED fabrication was scaled from lab-scale data to manufacturing scale using the authors' scale-up methodology. As a result, the GHG of TADF and phosphorescent material synthesis was 4-60 times greater than that of the fluorescent material. The major GHG contributor for these materials was solvent use. Additionally, there was little difference among the lifecycle GHG of OLED displays employing the three materials. Because the amount of emissive material in an OLED display is on the order of 1 mg, the high energy demand of TADF and phosphorescent materials contributed little to the overall GHG of one display. This study analyzed GHG solely, but further analysis should consider material criticality, in which TADF materials show an advantage over other materials.